By Omega Team

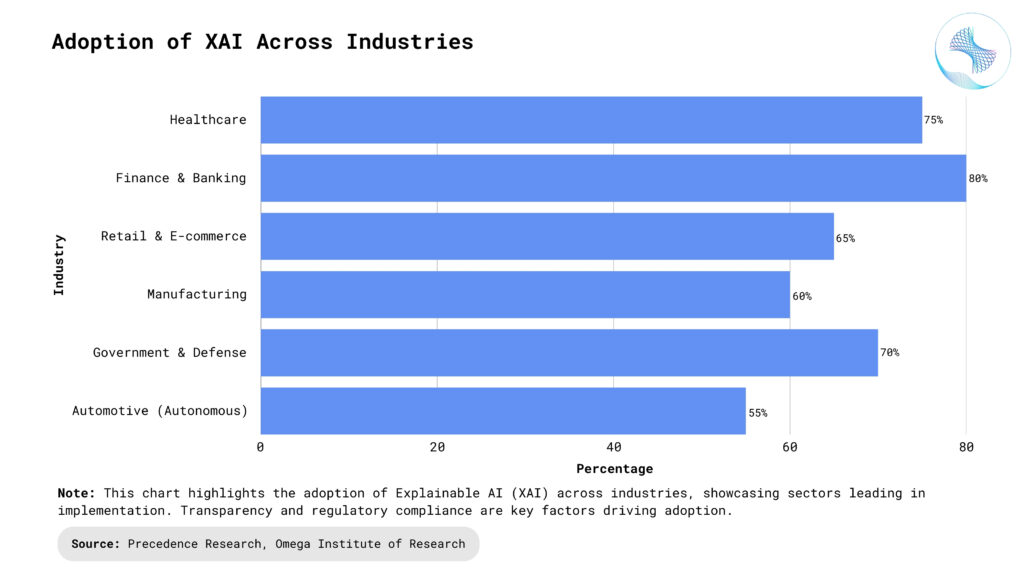

Artificial Intelligence (AI) is transforming industries like healthcare, finance, autonomous vehicles, and cybersecurity by optimizing operations and enhancing decision-making. However, as AI systems become more complex, their decision-making processes often lack transparency, raising concerns about trust, fairness, accountability, and regulatory compliance. Explainable AI (XAI) addresses these issues by making AI models more interpretable, allowing users to understand and trust AI-driven outcomes. XAI enhances ethical AI deployment, mitigates biases, and ensures compliance with evolving regulations. By providing insights into AI decision-making, it empowers data scientists, business leaders, and regulators to audit and refine AI systems effectively. As AI adoption grows, explainability becomes crucial for responsible and trustworthy implementation. This article explores the significance of XAI, its methodologies, and its role in shaping the future of AI.

What is Explainable AI (XAI)?

Explainable AI (XAI) refers to a set of techniques and methodologies that make AI decisions more understandable to humans. XAI aims to provide insights into how AI models arrive at their conclusions, allowing users to interpret results, detect biases, and ensure fairness in AI-driven decision-making. Unlike traditional black-box approaches, XAI techniques attach justifications to a model's predictions, making AI systems more accessible and practical for real-world applications. The goal is to build trust and accountability, ensuring that AI-based decisions align with human values and ethical standards. XAI is particularly crucial in high-stakes industries like healthcare, finance, and autonomous systems, where explainability can impact regulatory compliance and user adoption. By improving transparency, XAI helps organizations refine AI models, mitigate risks, and enhance decision-making processes. As AI continues to evolve, integrating explainability into AI development will be key to fostering responsible and ethical AI innovation.

The Critical Role of Explainability in AI

Trust and Transparency: One of the biggest challenges in AI adoption is the lack of trust in AI-generated decisions. Many AI models, particularly deep learning systems, operate as “black boxes” that provide accurate predictions without clear reasoning. XAI makes AI more transparent, allowing users to understand why certain decisions were made. This fosters greater trust among businesses, consumers, and regulators, supporting responsible and ethical use of AI. Moreover, transparent AI systems encourage collaboration between humans and AI, enhancing confidence in automated decision-making. Increased trust also leads to higher acceptance rates of AI-driven solutions across different industries.

Regulatory Compliance: Governments and regulatory bodies across industries are enforcing stricter rules to ensure that AI-driven decisions are explainable and fair. The General Data Protection Regulation (GDPR) in Europe gives individuals subject to automated decision-making a right to meaningful information about the logic involved, which pushes organizations toward interpretable decision processes. The Health Insurance Portability and Accountability Act (HIPAA) in the U.S. does not address explainability directly, but it constrains how AI systems may handle protected health information. Compliance with such regulations helps businesses avoid legal issues and supports ethical AI deployment. Additionally, organizations that prioritize explainability gain a competitive edge by demonstrating responsible AI use, enhancing their reputation. As regulatory landscapes evolve, companies investing in XAI will be better prepared for future compliance challenges.

Bias and Fairness: AI models can unintentionally learn and propagate biases present in training data, leading to unfair or discriminatory outcomes. Explainability helps organizations identify and mitigate these biases, ensuring fairness in AI systems. For instance, in hiring algorithms or loan approvals, XAI can reveal whether biased factors (such as gender or ethnicity) are influencing decisions, allowing for corrective actions. By proactively addressing biases, businesses can create more equitable AI systems that align with diversity and inclusion initiatives. This also reduces the risk of reputational damage and legal consequences associated with biased AI models.
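One simple way to check whether a sensitive attribute is influencing decisions is to change only that attribute and see whether the outcome flips. The sketch below assumes a deliberately biased, hypothetical scoring rule (`approve_loan`) and made-up applicant data; `flip_test` is an illustrative helper, not a standard library function.

```python
# Hypothetical scoring rule, written to be deliberately biased for illustration.
def approve_loan(applicant: dict) -> bool:
    score = applicant["income_k"] // 2 + 2 * applicant["years_employed"]
    if applicant["gender"] == "F":  # the biased term the audit should expose
        score -= 5
    return score >= 30


def flip_test(model, applicants, attribute, values):
    """Return applicants whose decision changes when only `attribute` changes."""
    flagged = []
    for applicant in applicants:
        # Evaluate the same applicant once per possible attribute value.
        outcomes = {v: model({**applicant, attribute: v}) for v in values}
        if len(set(outcomes.values())) > 1:
            flagged.append((applicant, outcomes))
    return flagged


# Made-up applicants (income in thousands).
applicants = [
    {"income_k": 40, "years_employed": 6, "gender": "F"},
    {"income_k": 80, "years_employed": 10, "gender": "M"},
    {"income_k": 20, "years_employed": 2, "gender": "F"},
]

flagged = flip_test(approve_loan, applicants, "gender", ["F", "M"])
for applicant, outcomes in flagged:
    print("Decision depends on gender for:", applicant, outcomes)
```

A passing flip test does not prove a model is fair, since other features can act as proxies for the sensitive one, but a failing test is direct evidence that corrective action is needed.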

Debugging and Model Improvement: AI developers often struggle to optimize and debug AI models, particularly deep learning networks with millions of parameters. XAI techniques help identify incorrect predictions, analyze model weaknesses, and improve overall performance. This leads to better model refinement and optimization, ensuring reliable and robust AI applications. Understanding how AI models behave under different scenarios allows developers to fine-tune algorithms more effectively. Moreover, enhanced debugging capabilities lead to faster deployment cycles and reduced costs associated with AI development and maintenance.

User Adoption and Satisfaction: AI systems are increasingly used in consumer-facing applications, such as chatbots, recommendation engines, and virtual assistants. If users do not understand why an AI system is making specific recommendations, they may be reluctant to trust or use it. XAI helps bridge this gap by providing clear explanations, enhancing user experience, and driving wider AI adoption. When users feel informed and in control, they are more likely to engage with AI-powered tools and services. This ultimately improves customer retention, brand loyalty, and the overall perception of AI-driven products.

Techniques for Explainable AI

XAI methodologies can be broadly classified into two categories: intrinsic and post-hoc explainability.

Intrinsic Explainability

These models are designed to be interpretable by default. They prioritize clarity over complexity, making it easier to understand their decision-making process. This ensures that stakeholders can easily trace and validate AI-generated decisions without requiring complex analytical tools. Intrinsic models are particularly useful in regulated industries, where transparency and accountability are critical. Examples include:

- Decision Trees: These hierarchical models follow a structured, rule-based approach, making their decisions easy to trace and interpret. Their step-by-step nature helps users understand exactly how an outcome was reached, ensuring transparency in decision-making. Decision trees are widely used in healthcare, finance, and risk assessment due to their straightforward structure.

- Linear Regression: A statistical method that assigns weights to input features, clearly showing their impact on predictions. By analyzing the coefficient values, users can determine which factors most influence the outcome, improving interpretability. This technique is frequently used in economic forecasting, medical research, and marketing analytics.

- Rule-Based Models: Use predefined conditions to make decisions, ensuring transparency and ease of interpretation. These models are commonly used in expert systems and regulatory compliance tools, where predefined logic must be followed. Rule-based approaches are highly effective for applications requiring strict adherence to predefined policies or ethical guidelines.
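The following sketch illustrates why the models above are considered interpretable by design: a linear model's prediction decomposes into one term per feature, and a rule-based model can report exactly which rule fired. The weights, rules, and feature names are hypothetical values chosen for illustration.

```python
# Hypothetical linear scoring model: prediction = intercept + sum(weight * value).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5}
INTERCEPT = 1.0


def linear_explain(x):
    """Return the prediction and each feature's additive contribution to it."""
    contributions = {name: WEIGHTS[name] * x[name] for name in WEIGHTS}
    prediction = INTERCEPT + sum(contributions.values())
    return prediction, contributions


# Hypothetical rule list, checked in order; the first matching rule decides.
RULES = [
    ("late_payments > 3", lambda x: x["late_payments"] > 3, "deny"),
    ("debt_ratio > 0.5", lambda x: x["debt_ratio"] > 0.5, "review"),
]


def rule_decide(x, default="approve"):
    """Return the decision together with the rule that produced it."""
    for description, condition, outcome in RULES:
        if condition(x):
            return outcome, description
    return default, "no rule matched"


applicant = {"income_k": 50, "debt_ratio": 0.6, "late_payments": 1}
score, parts = linear_explain(applicant)
decision, reason = rule_decide(applicant)

print(f"score={score:.2f}, contributions={parts}")
print(f"decision={decision}, because: {reason}")
```

In both cases the explanation is a by-product of the model itself, so no separate explanation technique is required.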

Post-hoc Explainability

For complex AI models like deep neural networks, post-hoc methods provide interpretability after a model has been trained. These techniques help uncover the reasoning behind AI-generated predictions, enabling users to build trust in the system’s decisions. Post-hoc explainability is crucial for AI applications in high-stakes domains such as autonomous vehicles, medical diagnostics, and fraud detection. Common techniques include:

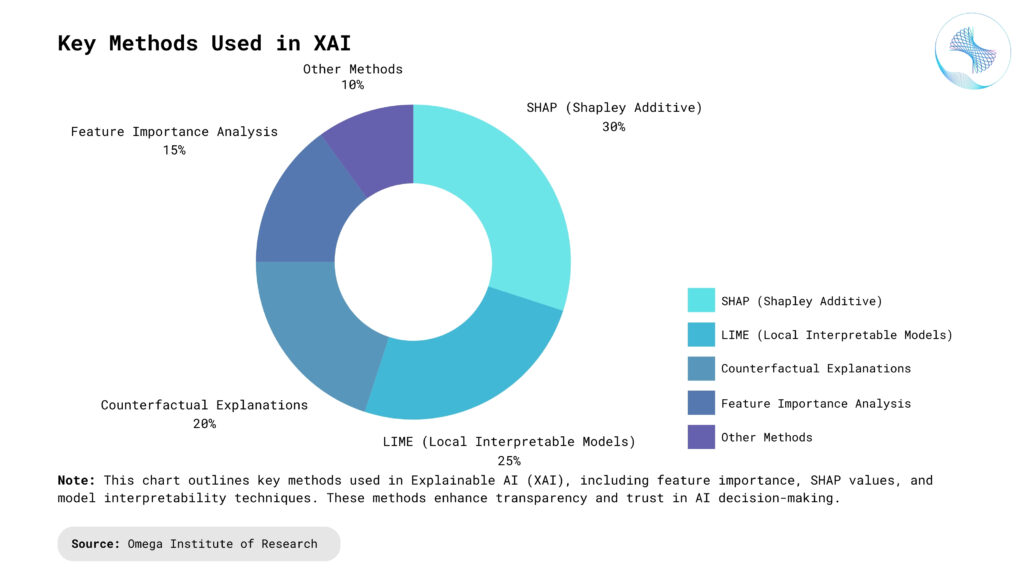

- Feature Importance Analysis: Identifies which features (input variables) had the greatest influence on a model’s output. This helps businesses and researchers understand which factors drive AI decisions, improving model refinement. Feature importance analysis is widely used in credit scoring, healthcare diagnostics, and customer segmentation.

- SHAP (SHapley Additive exPlanations): Uses Shapley values from cooperative game theory to distribute a prediction among the input features, so that the feature contributions sum to the difference between the prediction and a baseline output. By assigning a contribution value to each feature, SHAP provides a quantitative breakdown of an individual prediction. This technique is widely used in financial risk assessment, medical AI, and automated decision support systems.

- LIME (Local Interpretable Model-agnostic Explanations): Perturbs a single input, records the complex model’s predictions on the perturbed samples, and fits a simple interpretable surrogate (such as a sparse linear model) that approximates the complex model’s behavior near that input. Because the explanation is local, LIME allows users to validate AI decisions on a case-by-case basis. This is particularly beneficial in legal and healthcare applications, where explanations need to be understandable by non-technical users.

- Saliency Maps and Grad-CAM: Visualization tools used in image-based AI models to highlight which areas of an input image influenced the decision. These techniques are widely used in medical imaging, facial recognition, and autonomous driving to enhance interpretability. By visually representing model focus, they help ensure AI decisions align with human intuition.

- Counterfactual Explanations: Illustrate “what-if” scenarios by showing how a decision would change if input variables were altered. This approach helps users understand the reasoning behind AI decisions and explore alternative outcomes. Counterfactual explanations are particularly useful in lending, hiring, and legal applications, where understanding alternative scenarios is critical.
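To make the Shapley-value idea behind SHAP concrete, here is a brute-force sketch that computes exact Shapley values for a three-feature toy model. It is not the SHAP library; `shapley_values`, the toy `model`, and the all-zeros baseline are assumptions made for illustration, and "removing" a feature is approximated by substituting its baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    names = list(x)
    n = len(names)

    def value(coalition):
        mixed = {f: (x[f] if f in coalition else baseline[f]) for f in names}
        return model(mixed)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n-|S|-1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of f when added to coalition s.
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def model(x):
    # Hypothetical model with an interaction between b and c.
    return 2.0 * x["a"] + x["b"] * x["c"]


x = {"a": 1.0, "b": 2.0, "c": 3.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}
phi = shapley_values(model, x, baseline)
print(phi)
print("sum of contributions:", sum(phi.values()))
print("prediction minus baseline:", model(x) - model(baseline))
```

In this toy case the interaction term `b * c` is split equally between `b` and `c`, and the contributions add up to the gap between the prediction and the baseline output.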

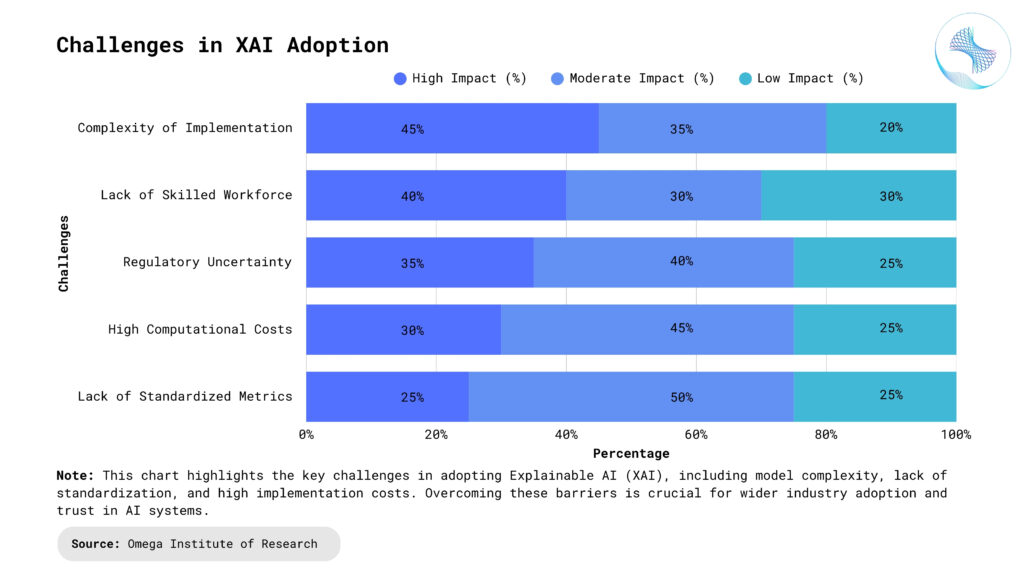
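The counterfactual "what-if" idea described above can be sketched as a small search: for each feature, step its value until the decision flips, then report the first value that changes the outcome. The decision rule `approve`, the feature names, and the step sizes are hypothetical; practical counterfactual methods also handle multiple features at once and plausibility constraints, which this sketch ignores.

```python
def approve(x):
    # Hypothetical integer scoring rule: income in thousands, debt as a percentage.
    return 5 * x["income_k"] - 4 * x["debt_pct"] >= 100


def single_feature_counterfactual(model, x, steps, max_steps=50):
    """For each feature, find the first stepped value that flips the decision."""
    original = model(x)
    results = {}
    for feature, step in steps.items():
        for k in range(1, max_steps + 1):
            candidate = {**x, feature: x[feature] + k * step}
            if model(candidate) != original:
                results[feature] = candidate[feature]
                break  # keep the smallest change found for this feature
    return results


applicant = {"income_k": 40, "debt_pct": 50}   # currently denied
steps = {"income_k": 5, "debt_pct": -5}         # direction and size of each change

counterfactuals = single_feature_counterfactual(approve, applicant, steps)
for feature, new_value in counterfactuals.items():
    print(f"Approved if {feature} were {new_value} instead of {applicant[feature]}")
```

Statements of this form ("approved if income were 60 instead of 40") are often easier for an applicant to act on than a list of feature weights.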

Challenges in Implementing XAI

Trade-off Between Accuracy and Explainability: Interpretable models, such as decision trees and linear regression, are easier to explain but may lack predictive power compared to deep learning models. On the other hand, highly accurate AI models, like deep neural networks, are often difficult to interpret. Balancing accuracy and explainability is a significant challenge for AI researchers. Organizations must carefully choose between simpler models that offer transparency and complex models that deliver higher accuracy. Moreover, industry demands often require both performance and explainability, making it crucial to develop hybrid approaches that optimize both aspects. Researchers are actively working on new techniques, such as explainability-aware training, to mitigate this trade-off.

Scalability: Some XAI techniques require extensive computational resources, making them difficult to implement in real-time applications. Exact Shapley values, for instance, require evaluating the model on a number of feature coalitions that grows exponentially with the number of features, and LIME needs many perturbed predictions for every single explanation. As AI models and datasets scale, the cost of generating explanations increases, leading to performance bottlenecks. Real-time AI applications, such as fraud detection and autonomous vehicles, require instant decision-making, making scalability a major concern. Developing lightweight and efficient XAI methods that maintain interpretability without sacrificing speed is a key research focus in AI development.
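One common way to reduce explanation cost is to estimate Shapley-style contributions by sampling random feature orderings instead of enumerating every coalition. The sketch below assumes a hypothetical 20-feature linear model and counts model calls to show the difference in cost; `sampled_shapley` is an illustrative helper, not a library API.

```python
import random

# Hypothetical 20-feature linear model; the weights are made up for illustration.
WEIGHTS = {f"f{i}": float(i + 1) for i in range(20)}
counter = {"calls": 0}


def model(x):
    counter["calls"] += 1  # track how many times the model is evaluated
    return sum(WEIGHTS[name] * value for name, value in x.items())


def sampled_shapley(model, x, baseline, n_permutations=200, seed=0):
    """Estimate Shapley values by averaging marginal gains over random orderings."""
    rng = random.Random(seed)
    names = list(x)
    phi = {f: 0.0 for f in names}
    for _ in range(n_permutations):
        order = names[:]
        rng.shuffle(order)
        current = dict(baseline)
        previous = model(current)
        for f in order:
            current[f] = x[f]          # switch feature f from baseline to actual
            value = model(current)
            phi[f] += value - previous  # marginal gain of f in this ordering
            previous = value
    return {f: total / n_permutations for f, total in phi.items()}


x = {name: 1.0 for name in WEIGHTS}
baseline = {name: 0.0 for name in WEIGHTS}
phi = sampled_shapley(model, x, baseline)

print(counter["calls"], "model calls, versus", 2 ** len(x), "coalitions for exact enumeration")
```

For a linear model the sampled estimate matches the exact values; for models with feature interactions the estimate varies with the seed and the number of permutations, so the sample size becomes a speed versus precision setting.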

Domain-Specific Interpretability: What is considered explainable varies across industries. A medical diagnosis AI must provide explanations that doctors can understand, while a financial fraud detection system needs to present insights relevant to auditors. Adapting XAI methods to different domains is a challenge. Domain-specific knowledge is required to translate AI decisions into meaningful and actionable insights for end-users. Additionally, industry regulations and compliance requirements dictate different levels of interpretability, further complicating the implementation of standardized XAI techniques. Developing customizable XAI frameworks that align with industry needs is essential for broader adoption.

Security and Privacy Risks: Exposing AI decision-making processes can inadvertently reveal sensitive information, such as proprietary business logic or personally identifiable information. Detailed explanations can also help attackers reverse-engineer a model's behavior and craft adversarial inputs that manipulate its output. Striking a balance between transparency and security is therefore critical. Organizations must implement robust security measures to protect AI systems while maintaining a level of explainability that ensures trust and compliance.

Applications of XAI

Healthcare

Medical Diagnosis: XAI enables doctors to understand AI-generated diagnoses, improving decision-making and patient trust. Transparent AI models help explain why certain conditions are diagnosed, reducing skepticism in AI-driven healthcare. This is especially crucial in radiology and pathology, where AI assists in identifying diseases from medical images.

Drug Discovery: AI-driven drug development benefits from explainability by providing insights into how potential compounds are identified. Researchers can validate AI-generated hypotheses, ensuring that drug candidates are selected based on meaningful scientific principles. This enhances collaboration between AI specialists and pharmaceutical experts, accelerating innovation.

Treatment Recommendations: XAI ensures that AI-driven treatment plans are understandable and justifiable for doctors and patients. By revealing the reasoning behind suggested treatments, medical professionals can confidently integrate AI insights into their decision-making process. This also aids in personalized medicine, where AI tailors treatments to individual patients.

Finance

Credit Scoring: Lenders can explain why a loan was approved or denied, ensuring transparency and fairness. Borrowers can better understand the factors influencing their creditworthiness, reducing disputes and increasing confidence in financial institutions. Regulators also benefit from XAI, ensuring compliance with fair lending practices.

Fraud Detection: AI systems that detect fraudulent transactions can provide explanations to investigators for better case resolution. By understanding why a transaction was flagged, fraud analysts can assess cases more efficiently and reduce false positives. This improves fraud prevention strategies and enhances customer trust in financial security.

Algorithmic Trading: AI-driven trading strategies can be made more interpretable, allowing financial analysts to validate and adjust models. XAI helps traders understand why a particular buy or sell decision was made, reducing the risk of blindly relying on automated systems. This is particularly critical in volatile markets where AI-based trading systems need to justify rapid decisions.

Autonomous Vehicles

Decision Justification: XAI helps engineers and regulators understand why a self-driving car made a particular decision in a given situation, improving safety. Transparency in AI decisions ensures accountability in case of accidents or legal disputes. Regulators can also use explainable models to create better policies for autonomous driving.

Obstacle Detection and Avoidance: Explainability in perception systems allows developers to analyze how self-driving cars recognize obstacles and avoid collisions. By understanding how AI interprets road conditions, engineers can improve model accuracy and reduce potential errors. This is critical for ensuring safer navigation in dynamic environments.

Passenger Safety and Comfort: XAI helps autonomous vehicle manufacturers fine-tune AI behaviors to prioritize safety and passenger experience. For instance, explaining why a car slowed down or changed lanes helps passengers feel more confident in AI-driven decisions. This is essential for widespread adoption of self-driving technology.

Cybersecurity

Threat Detection: XAI enhances security systems by explaining why certain activities were flagged as suspicious, helping cybersecurity analysts respond effectively. By making AI-driven threat assessments more transparent, organizations can better understand attack patterns and refine their security measures. This is especially useful in detecting zero-day threats, where explainability aids in rapid incident response.

Intrusion Prevention: Explainable AI helps IT teams understand how AI models identify and block malicious activities, ensuring that cybersecurity measures remain robust. Understanding why a security alert was triggered prevents unnecessary disruptions while improving overall system protection. This is particularly useful in industries like banking and healthcare, where security breaches have severe consequences.

Compliance and Risk Management: XAI helps AI-driven cybersecurity solutions meet regulatory and compliance standards. Organizations can provide clear justifications for security actions taken by AI, making audits more efficient. This is crucial for industries subject to data protection laws such as GDPR and HIPAA, where organizations must be able to document and justify how personal data is protected.

The Future of Explainable AI

As AI adoption grows, regulatory bodies will likely enforce stricter transparency requirements. Future trends in XAI include:

Hybrid AI Models: Combining interpretable models with deep learning to achieve both accuracy and explainability. Researchers are exploring techniques like neural-symbolic learning, where symbolic reasoning complements deep learning’s predictive power. This approach allows AI models to balance performance with transparency, making them more applicable in high-stakes industries. Additionally, hybrid models enable real-time adjustments, improving AI adaptability in dynamic environments.

Regulation-Driven XAI: Governments and industry regulators pushing for standardized AI transparency frameworks. As AI becomes integral to decision-making in finance, healthcare, and law enforcement, regulatory bodies will demand clearer justifications for AI-driven outcomes. Organizations will need to incorporate explainability into AI governance strategies to meet compliance requirements. Standardized explainability metrics may emerge, providing a universal benchmark for AI transparency and accountability.

Advancements in Natural Language Explanations: AI systems generating human-readable explanations for their decisions, making AI more accessible to non-technical users. Future XAI models will leverage large language models (LLMs) to convert complex AI reasoning into easy-to-understand narratives. This will bridge the gap between technical AI developers and business users, enabling more effective decision-making. Additionally, interactive AI explanations will allow users to ask follow-up questions, refining their understanding of AI-driven insights.
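As a much simpler stand-in for the LLM-generated explanations described above, the sketch below turns per-feature contribution scores into a readable sentence using a fixed template. The function name `describe`, the contribution values, and the wording are hypothetical; the point is only to show the step from numeric attributions to text.

```python
def describe(decision, contributions, top_k=2):
    """Summarize the top_k largest contributions (by magnitude) as one sentence."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    parts = []
    for name, value in ranked[:top_k]:
        direction = "raised" if value > 0 else "lowered"
        readable = name.replace("_", " ")
        parts.append(f"{readable} {direction} the score by {abs(value):.2f}")
    return f"Main factors behind the '{decision}' decision: " + "; ".join(parts) + "."


# Made-up contribution scores, e.g. from a linear model or a SHAP-style method.
contributions = {"debt_ratio": -2.4, "income": 1.1, "late_payments": -0.5}
text = describe("deny", contributions)
print(text)
```

A template like this is predictable and easy to audit, at the cost of the flexibility and follow-up dialogue that a language-model-based explainer could offer.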

Conclusion

Explainable AI (XAI) is crucial for fostering trust, improving model reliability, and ensuring ethical AI deployment. By integrating explainability techniques, businesses and researchers can harness AI’s power while maintaining accountability, transparency, and fairness. As technology evolves, XAI will play an essential role in making AI more accessible and user-friendly across industries, ensuring that AI serves humanity responsibly and effectively. Regulatory frameworks will continue to push for greater transparency, compelling organizations to adopt XAI-driven approaches. Advancements in AI explainability will not only enhance decision-making but also reduce risks associated with biased or opaque models. Ultimately, prioritizing XAI will lead to more responsible, trustworthy, and human-aligned AI systems.

