- By Omega Team

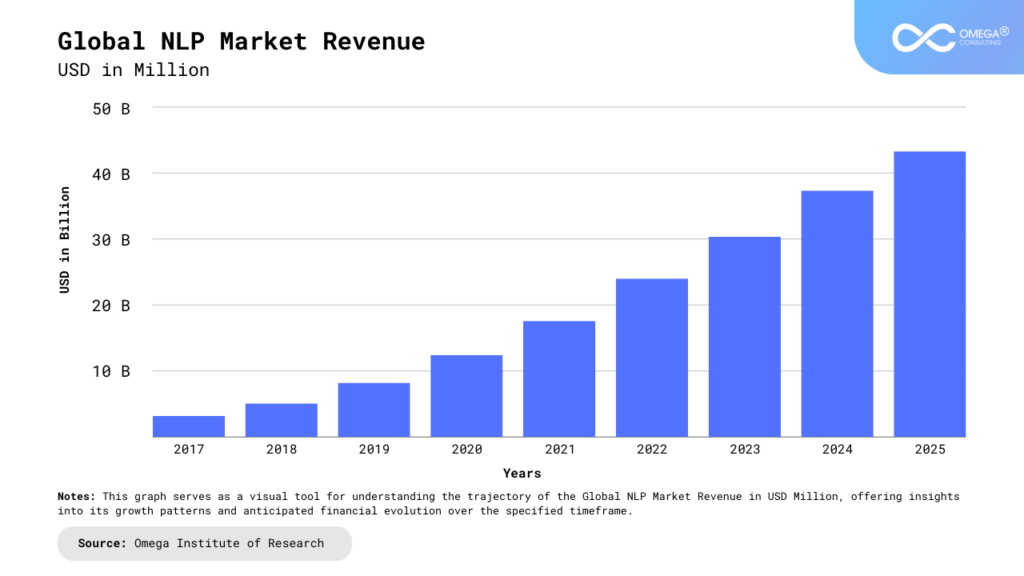

The convergence of natural language processing (NLP) and artificial intelligence (AI) has advanced rapidly in recent years, driven chiefly by the introduction of advanced language models. Among these, Large Language Models (LLMs) have risen to prominence for their ability to understand and generate text that closely resembles human writing. This article explores the domain of LLMs and their smaller counterparts, commonly called Tiny LLMs.

The Rise of Language Models

Historical Context

To understand the current landscape, it helps to look back at how language models evolved within NLP. The field moved from rule-based systems to statistical models through continuous innovation, and this history sets the stage for the leap that modern language models represent.

Early NLP systems relied on hand-written rules, which were rigid and hard to extend. The shift to models that learn from data was a turning point, laying the groundwork for the emergence of advanced language models.

Significance Across Applications

The significance of language models extends across a multitude of applications, permeating critical sectors such as healthcare, finance, and communication technologies. Their unique ability to decipher and generate human-like language has catalyzed advancements in various areas, including sentiment analysis, chatbots, and translation services.

In healthcare, language models aid in parsing and understanding vast amounts of medical literature, contributing to diagnostic processes and medical research. In the financial sector, these models play a pivotal role in analyzing market trends, making predictions, and automating financial tasks. Communication technologies leverage language models to enhance natural language understanding in virtual assistants and voice-activated systems, transforming the way humans interact with technology.

Introduction to LLMs

Among AI capabilities, LLMs have become focal points of innovation and progress. Built on massive datasets and sophisticated neural architectures, these models have overcome many limitations of earlier approaches, showing strong linguistic ability and contextual awareness.

Large Language Models, exemplified by GPT-3.5, are at the forefront of this shift. Trained on diverse datasets that span a wide range of linguistic styles and subtleties, these models capture context, tone, and nuance to a degree that can resemble human language use. Their neural architectures enable them to generate coherent, contextually relevant text, making them versatile tools across many applications.

In the subsequent sections of this article, we delve deeper into the evolution, applications, and considerations surrounding both LLMs and their smaller counterparts, Tiny LLMs. As technology advances, the synergy between human language and artificial intelligence is becoming increasingly seamless, promising a future where language models play an integral role in shaping how we interact with and harness the power of AI.

Large Language Models (LLMs)

Definition and Overview

Defining LLMs:

Large Language Models (LLMs) are neural networks, typically with billions of parameters, trained to process and generate natural language. Beyond their sheer size, they capture contextual nuances in language data, which places them at the intersection of artificial intelligence and natural language processing.

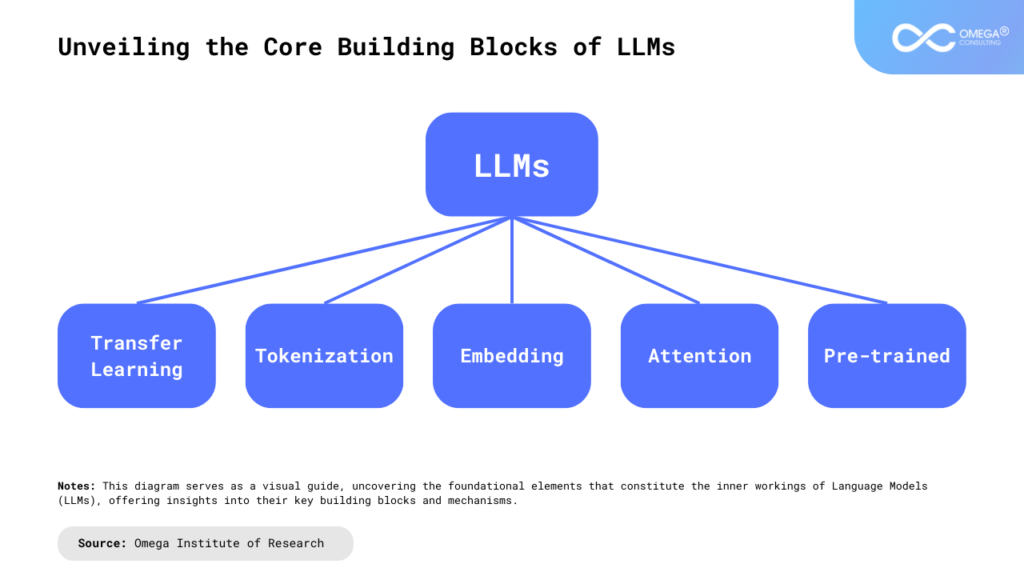

Key Architectural Components:

The Transformer architecture is the foundation of most modern LLMs. Two of its key elements are self-attention, which lets the model weigh how strongly each token relates to every other token in the input, and positional encoding, which supplies information about word order. Together, these components allow LLMs to capture the complex patterns found in diverse linguistic expressions.

Training Process: Pre-training and Fine-tuning:

An LLM is built in two fundamental stages: pre-training and fine-tuning. Pre-training exposes the model to vast amounts of diverse text, from which it learns the general structure of language, while fine-tuning adapts the pre-trained model to specific tasks or domains.
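
To make the pre-training objective concrete, the sketch below computes the next-token prediction loss used by decoder-style LLMs such as the GPT family: at each position the model scores every vocabulary entry, and the loss is the average negative log-probability assigned to the token that actually comes next. It is a minimal NumPy illustration that assumes the logits come from some model (uniform values stand in here); supervised fine-tuning commonly reuses the same loss on task-specific text.

```python
import numpy as np

def next_token_loss(logits: np.ndarray, token_ids: np.ndarray) -> float:
    """Average cross-entropy of predicting token t+1 from position t.

    logits: (seq_len, vocab_size) scores produced by the model at each position.
    token_ids: (seq_len,) integer ids of the input sequence.
    """
    # Position t predicts token t+1, so drop the last prediction and the first target.
    pred = logits[:-1]
    targets = token_ids[1:]
    # Numerically stable log-softmax over the vocabulary.
    shifted = pred - pred.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Negative log-likelihood of each correct next token, averaged over positions.
    nll = -log_probs[np.arange(len(targets)), targets]
    return float(nll.mean())

# Toy example: uniform logits give a loss of log(vocab_size).
vocab_size, seq_len = 8, 5
tokens = np.array([1, 4, 2, 7, 3])
uniform = np.zeros((seq_len, vocab_size))
print(next_token_loss(uniform, tokens))
```

A model that has learned nothing scores log(vocab_size); training pushes the loss down by raising the probability of the observed next tokens.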

Transformer Architecture

Detailed Exploration:

A closer look at the Transformer architecture shows how attention mechanisms let the model focus on the most relevant parts of the input sequence. This capability lets LLMs capture nuances and long-range dependencies in data, leading to more robust language understanding.
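
The attention mechanism can be sketched as single-head scaled dot-product self-attention: each position's output is a weighted average of value vectors, with weights derived from query-key similarity. This is a minimal NumPy sketch; the random projection matrices stand in for learned weights, and real models use many heads, many layers, and optimized kernels.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability before exponentiating.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model) token representations.
    w_q, w_k, w_v: (d_model, d_head) projection matrices.
    Returns (output, weights) with shapes (seq_len, d_head) and (seq_len, seq_len).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Similarity of every query with every key, scaled by sqrt(d_head).
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if causal:
        # Decoder-style mask: position i may only attend to positions <= i.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v, causal=True)
print(out.shape)
print(attn.round(2))
```

The causal mask is what GPT-style models use during generation, so a token never attends to tokens that come after it.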

Self-Attention Mechanisms and Positional Encoding:

Self-attention and positional encoding work together to give LLMs a holistic view of the input. Self-attention relates every token to every other token, while positional encoding preserves word order, which attention on its own does not capture. These mechanisms let the model maintain context and coherence across the sequential structure of language.
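
One classic way to inject word order is the fixed sinusoidal encoding from the original Transformer design, which adds sine and cosine signals of different frequencies to each token embedding. The sketch below assumes an even embedding size. Many current LLMs use learned or rotary position embeddings instead, so treat this as an illustration of the idea rather than what any particular model does.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Fixed sin/cos position encodings of shape (seq_len, d_model)."""
    assert d_model % 2 == 0, "d_model must be even"
    positions = np.arange(seq_len)[:, None]       # (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]      # even dimension indices 2i
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)  # odd dimensions use cosine
    return pe

# The encodings are simply added to the token embeddings before the first layer.
embeddings = np.random.default_rng(0).normal(size=(6, 16))
x = embeddings + sinusoidal_positional_encoding(6, 16)
print(x.shape)
```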

Real-world Applications:

The Transformer architecture has many practical applications. LLMs built on it have been deployed effectively in natural language understanding tasks, chatbots, and summarization, reshaping how machines interpret and generate human-like text.

Applications of LLMs

Natural Language Understanding

Sentiment Analysis:

LLMs are adept at detecting sentiment in text, with applications across many domains. From customer feedback analysis to social media monitoring, they help organizations build a nuanced picture of user sentiment.
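
One common way to use a general-purpose LLM for sentiment analysis is zero-shot prompting: ask the model to label the text, then map its free-form reply onto a fixed label set. In the sketch below, `complete` is a hypothetical placeholder for whatever text-completion call your LLM provider or local model exposes, and the prompt wording and label set are illustrative assumptions.

```python
from typing import Callable

LABELS = ("positive", "negative", "neutral")

def build_sentiment_prompt(text: str) -> str:
    # Zero-shot instruction prompt; the exact wording is an illustrative assumption.
    return (
        "Classify the sentiment of the following customer feedback as "
        "positive, negative, or neutral. Answer with one word.\n\n"
        f"Feedback: {text}\nSentiment:"
    )

def classify_sentiment(text: str, complete: Callable[[str], str]) -> str:
    """Label `text` using `complete`, a hypothetical prompt -> reply function for any LLM."""
    reply = complete(build_sentiment_prompt(text)).strip().lower()
    # Replies may not follow the requested format, so match against the fixed label set.
    for label in LABELS:
        if reply.startswith(label):
            return label
    return "unknown"  # unparseable reply; worth logging for human review

def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call, used only to demonstrate the flow.
    return " Positive."

print(classify_sentiment("The new app update is fantastic!", fake_llm))
```

Parsing the reply defensively means an off-format answer yields `"unknown"` rather than a silently wrong label.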

Named Entity Recognition:

LLMs are effective at identifying and classifying named entities, such as people, organizations, and locations, within text. Applications include information extraction, entity linking, and data structuring.

Language Translation:

LLMs have transformed language translation, helping break down language barriers and foster global communication. They have played a pivotal role in automating translation services and improving their accuracy, facilitating cross-cultural communication.

Content Generation

Creative Writing:

The generative capabilities of LLMs extend to creative writing, where they have been used to craft narratives and other creative content. From poetry to short stories, LLMs show an ability to mimic and augment human creativity.

Automatic Code Generation:

LLMs also have practical applications in code generation, with the potential to automate routine coding tasks and streamline software development. They can assist developers by generating code snippets, improving productivity in the coding environment.

Ethical Considerations in Content Generation:

As LLMs become proficient content creators, ethical concerns around bias, accuracy, and responsible content generation come to the forefront. These implications of automated content creation underscore the need for ethical guidelines and human oversight in AI-driven content generation.

In the subsequent sections, we will further explore the nuanced landscape of Tiny LLMs, their applications, and the considerations that come with their integration into diverse business scenarios.

Tiny LLMs

Introduction to Tiny LLMs

Motivations Behind Development:

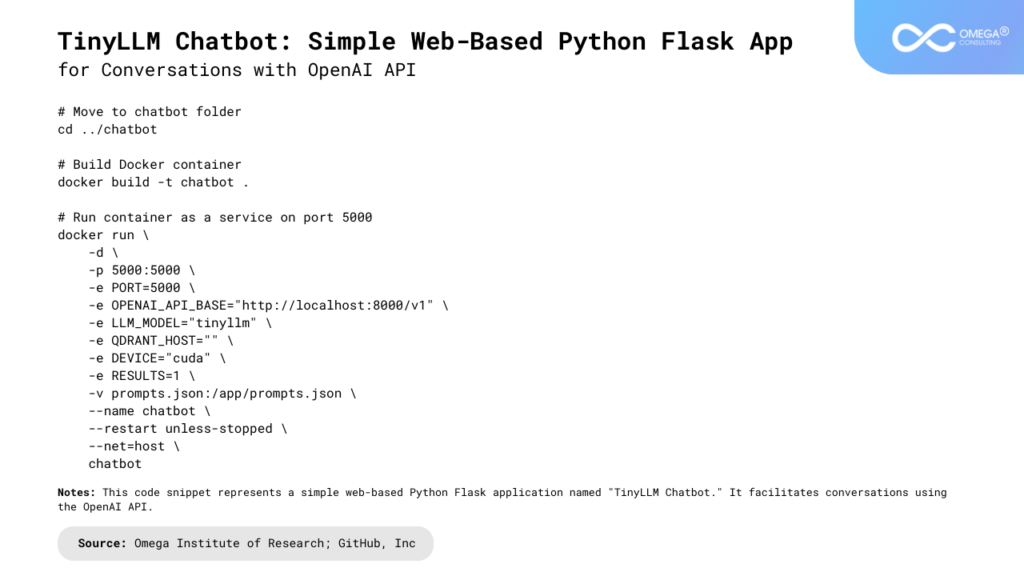

Tiny LLMs arose from the need for more compact, resource-efficient models. Large models demand substantial memory and compute, which pushed developers toward lighter alternatives. As demand grew for running language models on edge devices, smartphones, and other resource-constrained platforms, Tiny LLMs became essential for broadening access to advanced language processing.

Definition and Characteristics:

What distinguishes Tiny LLMs from their larger counterparts is a substantial reduction in model size and computational requirements. This makes them more agile and suitable for diverse applications: they are designed to deliver the best possible performance within the constraints of resource-limited environments.

Comparative Analysis:

Comparing Large and Tiny LLMs reveals clear trade-offs. Tiny LLMs give up some scale in exchange for efficiency and accessibility, an advantage that matters most where computational resources are scarce.

Advantages of Tiny LLMs

Reduced Computational Requirements:

Tiny LLMs rely on optimization techniques that reduce their computational requirements, which makes them well suited to deployment on resource-constrained devices. This extends language processing capabilities to edge computing, smartphones, and the Internet of Things (IoT).
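
A quick back-of-the-envelope calculation shows why smaller, lower-precision models fit on constrained devices: weight memory is roughly the parameter count times the bytes per parameter. The 7B and 1B sizes below are hypothetical examples, and the estimate ignores activations, the KV cache, and runtime overhead, so real memory use is higher.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# Hypothetical model sizes, chosen only to illustrate the arithmetic.
for name, params in [("7B model", 7e9), ("1B model", 1e9)]:
    for precision in ("fp16", "int4"):
        print(f"{name} @ {precision}: {weight_memory_gb(params, precision):.1f} GB")
```

A 7B-parameter model at fp16 needs about 14 GB for its weights alone, while a 1B-parameter model at int4 needs about 0.5 GB, which is the difference between requiring a dedicated GPU and fitting comfortably in a phone's memory.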

Faster Inference:

Faster inference makes Tiny LLMs attractive for real-time applications in sectors such as healthcare, finance, and robotics. Quicker responses improve efficiency wherever timely decision-making is critical.
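
A common rule of thumb explains much of this speed advantage: when generating one token at a time with batch size 1, decoding tends to be limited by memory bandwidth, because every new token requires reading all of the model's weights. Under that assumption, tokens per second is bounded by bandwidth divided by weight size. The 100 GB/s device below is hypothetical, and the bound ignores KV-cache reads, compute limits, and software overhead.

```python
def max_decode_tokens_per_sec(weight_bytes: float, mem_bandwidth_bytes_per_sec: float) -> float:
    """Rough upper bound for batch-size-1 autoregressive decoding.

    Assumes generation is memory-bandwidth bound: each new token reads every
    weight once. KV-cache traffic, compute limits, and overheads are ignored.
    """
    return mem_bandwidth_bytes_per_sec / weight_bytes

bandwidth = 100e9  # hypothetical device with 100 GB/s of memory bandwidth
print(max_decode_tokens_per_sec(14e9, bandwidth))   # 7B params stored at fp16
print(max_decode_tokens_per_sec(0.5e9, bandwidth))  # 1B params stored at int4
```

On the same hypothetical hardware, shrinking the weights from 14 GB to 0.5 GB raises the ceiling from roughly 7 to roughly 200 tokens per second, which is why compression and faster inference go hand in hand.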

Challenges and Considerations

Model Compression:

Model compression requires a delicate balance between reducing the size of a Tiny LLM and maintaining acceptable performance. Two common techniques are pruning, which removes weights that contribute little to the output, and quantization, which stores weights at lower numerical precision; both shrink the model while aiming to preserve its functionality.
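
Both techniques can be illustrated on a single weight matrix: unstructured magnitude pruning zeroes the smallest-magnitude weights, and symmetric per-tensor int8 quantization maps the remaining values to 8-bit integers with one scale factor. This NumPy sketch is a simplification under those assumptions; production toolkits typically use finer-grained (per-channel or per-group) scales, calibration data, and further training to recover accuracy.

```python
import numpy as np

def magnitude_prune(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    # k-th smallest absolute value serves as the pruning threshold.
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) <= threshold, 0.0, w)

def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = np.abs(w).max()
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale) -> np.ndarray:
    # Map the int8 codes back to approximate floating-point weights.
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(size=(256, 256)).astype(np.float32)
pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
error = np.abs(dequantize(q, scale) - pruned).max()
print(f"zeros: {(pruned == 0).mean():.2f}, int8 bytes: {q.nbytes}, max abs error: {error:.4f}")
```

Rounding to the nearest integer bounds each weight's reconstruction error by half the scale, so a single outlier weight, which inflates the scale, hurts per-tensor quantization; that is one motivation for finer-grained schemes.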

Knowledge Distillation:

Knowledge distillation is a critical technique for transferring the expertise of a large model (the teacher) to a smaller one (the student), typically by training the student to match the teacher's output distributions. The main challenge is preserving language proficiency in a more compact model, and effective distillation strategies aim to do so with minimal loss of accuracy.
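
A widely used formulation, from Hinton et al.'s 2015 work on distillation, trains the student on a blend of two terms: the KL divergence between teacher and student output distributions, both softened with a temperature `T`, and ordinary cross-entropy on the true labels. The sketch below assumes classification-style logits; for an LLM the same loss is applied per token over the vocabulary. The values of `T` and `alpha` and the random logits are illustrative assumptions, not recommendations.

```python
import numpy as np

def log_softmax(z: np.ndarray, T: float = 1.0) -> np.ndarray:
    # Temperature-scaled, numerically stable log-softmax over the last axis.
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, labels, T=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, labels)."""
    log_p_teacher = log_softmax(teacher_logits, T)
    log_p_student = log_softmax(student_logits, T)
    p_teacher = np.exp(log_p_teacher)
    # Soft-target term: KL divergence between temperature-softened distributions,
    # multiplied by T^2 so its gradients stay on a similar scale as T changes.
    kl = (p_teacher * (log_p_teacher - log_p_student)).sum(axis=-1)
    soft = (T ** 2) * kl.mean()
    # Hard-target term: ordinary cross-entropy with the true labels at T = 1.
    hard = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    return float(alpha * soft + (1 - alpha) * hard)

rng = np.random.default_rng(0)
teacher = rng.normal(size=(4, 10)) * 3.0  # stand-in for a large model's logits
student = rng.normal(size=(4, 10))        # stand-in for a small model's logits
labels = np.array([1, 0, 7, 3])
print(distillation_loss(student, teacher, labels))
```

The temperature spreads probability mass onto the teacher's non-top answers, so the student learns which wrong answers the teacher considers plausible, not just which answer is correct.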

Future Directions and Implications

The Evolution of Language Models

Anticipated Developments:

Envisioning the future of language models involves exploring anticipated developments in model architectures. This includes advancements in neural network design, architectural innovations, and the continuous refinement of algorithms that drive the evolution of language processing capabilities.

Innovations in Training Techniques:

Training techniques continue to evolve alongside the technology. Approaches such as transfer learning, which reuses knowledge from a pre-trained model for a new task, and meta-learning, which trains models to adapt quickly to new tasks, pave the way for more efficient and adaptable models.
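
Transfer learning in its simplest form keeps a pre-trained network frozen and trains only a small task-specific head on its features. In the sketch below, a fixed random projection is a hypothetical stand-in for a real pre-trained encoder and the dataset is synthetic, so it only illustrates the mechanics: the encoder's weights never change, and gradient descent updates only a logistic-regression head.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained encoder: fixed weights that are never updated.
W_frozen = rng.normal(size=(20, 16))

def encode(x: np.ndarray) -> np.ndarray:
    # Frozen feature extractor.
    return np.tanh(x @ W_frozen)

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

# Synthetic labelled data for the new downstream task.
X = rng.normal(size=(200, 20))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

features = encode(X)  # computed once, since the encoder is frozen
w_head = np.zeros(features.shape[1])
b_head = 0.0
lr = 0.1

def head_loss(w: np.ndarray, b: float) -> float:
    # Binary cross-entropy of the trainable logistic-regression head.
    p = sigmoid(features @ w + b)
    eps = 1e-12
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))

initial_loss = head_loss(w_head, b_head)
for _ in range(300):
    p = sigmoid(features @ w_head + b_head)
    err = p - y  # gradient of the per-example loss w.r.t. the head's logits
    w_head -= lr * features.T @ err / len(y)
    b_head -= lr * err.mean()
final_loss = head_loss(w_head, b_head)
print(f"head loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

Training only the head is cheap because the features are computed once; full fine-tuning would also update the encoder, at much higher cost.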

Integration of Multimodal Capabilities:

The integration of multimodal capabilities, where models can process and understand various forms of input including text, images, and audio, is a frontier that promises to redefine the scope of language models. Such integration could lead to more contextually aware and versatile language models.

Ethical Considerations

Addressing Bias and Fairness:

Addressing bias and ensuring fairness are central ethical concerns in the development and deployment of LLMs. Mitigating bias in both training data and algorithms, and promoting fairness throughout, are essential to building equitable AI systems.

Responsible AI:

Fostering responsible AI practices becomes imperative in the realm of language models. This section navigates through the principles and practices that underpin responsible AI, including transparency, accountability, and interpretability, to ensure that language models are developed and utilized ethically.

Public Awareness and Policy Implications:

The broader societal impact of LLMs necessitates public awareness and thoughtful policy considerations. This section investigates ongoing efforts to raise awareness about the capabilities and limitations of language models. It also explores potential policy implications and regulations governing the responsible use of language models to address societal concerns.

Conclusion

The move from expansive Large Language Models to compact Tiny LLMs marks a paradigm shift in NLP. As these models grow more capable, it becomes increasingly important to balance technological advancement with the ethical considerations that underpin responsible AI. The future of language models holds exciting possibilities, and understanding their nuances is key to navigating the evolving landscape of artificial intelligence. Researchers, developers, and enthusiasts alike are invited to explore these models further, forging a path toward a more intelligent and ethically sound future.
