
By Omega Team

Fine-tuning is a core technique in machine learning, especially in the context of transfer learning. It refers to taking a pre-trained model, which has already learned from a large dataset, and adjusting it for a specific task or dataset. This lets practitioners leverage large models trained on vast amounts of data without training a model from scratch, which is computationally expensive and time-consuming. Through fine-tuning, the model adapts its existing knowledge to the nuances of a new problem, typically reaching strong performance with far less data, compute, and training time than training from scratch would require. Fine-tuning is widely used in fields such as natural language processing, computer vision, and speech recognition, where effective pre-trained models are readily available.

What is Fine-Tuning?

Fine-tuning is the practice of adjusting a pre-trained model’s parameters to better suit a specific task. The process involves training the model for a few additional epochs on a smaller, task-specific dataset. The idea is to retain the general knowledge the model acquired during its initial training while adapting it to perform better on a particular task or dataset. Because it builds on the strengths of a pre-trained model instead of starting from scratch, it makes model development considerably more efficient.

Fine-tuning is common in deep learning, particularly in computer vision, natural language processing (NLP), and speech recognition. It lets practitioners benefit from the large datasets and computationally expensive training runs behind a pre-trained model without repeating them. Because only task-specific adjustments need to be learned, fine-tuning can often reach high accuracy with fewer resources and in less time. This makes it an essential technique for applying machine learning in real-world scenarios where both efficiency and performance matter.

Why is Fine-Tuning Important?

Fine-tuning allows practitioners to use models that have already been trained on large and diverse datasets, such as ImageNet for computer vision or large corpora of text for NLP. These models have learned general features and representations that can be applied to a wide variety of tasks. Fine-tuning them for a specific task helps to:

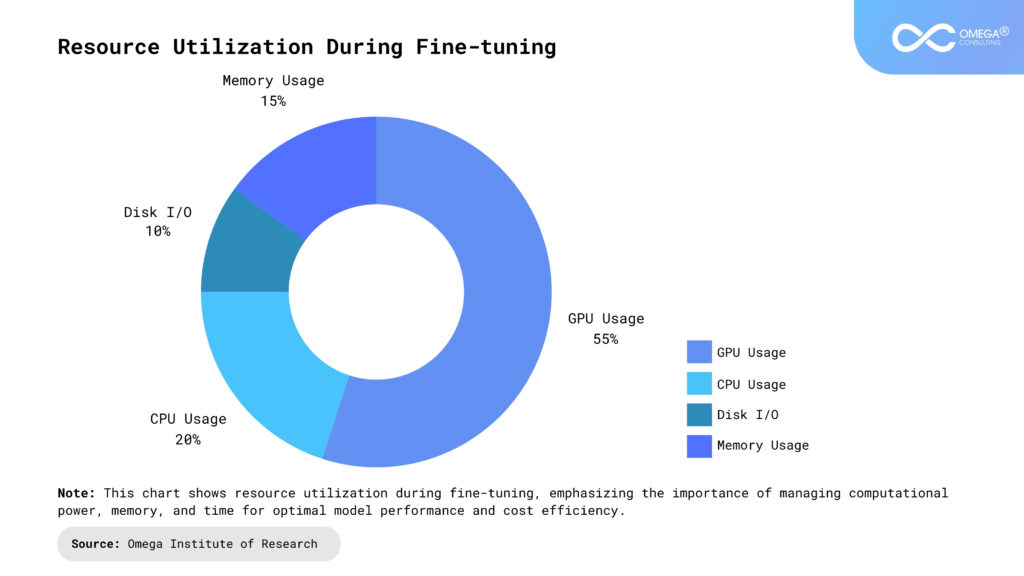

Reduce Training Time: Since the model has already learned general features, fine-tuning it on a smaller dataset takes significantly less time than training from scratch. This is particularly valuable when computational resources are limited or when quick deployment is needed. Shorter training cycles also let teams iterate faster and experiment with different datasets or configurations, so businesses can bring machine learning solutions to market more quickly and stay competitive.

Improve Accuracy: Fine-tuning adapts the model’s learned features to the nuances of the specific dataset. By updating its parameters on the new data, the model refines its decision boundaries and improves its prediction accuracy. This is particularly beneficial when the new dataset differs from the one used in pre-training, where the unadapted model would be less suited to the data. As a result, fine-tuned models can perform well even in highly specialized applications.

Leverage Pre-trained Knowledge: Because the model has already learned valuable patterns, fine-tuning avoids the need to collect and label the massive amounts of data required to train a model from scratch, a process that is time-consuming and expensive. This makes it possible to apply state-of-the-art models to specialized tasks at modest effort and cost while keeping the focus on task-specific performance.

How Does Fine-Tuning Work?

Fine-tuning adapts a pre-trained model to a specific task by training it on a smaller, task-specific dataset. The model’s parameters are updated for a few additional epochs, while the pre-trained weights are either kept frozen or changed only slightly. This allows the model to retain its general knowledge while improving its performance on the new task, and it requires far less data and computation than training a model from scratch.

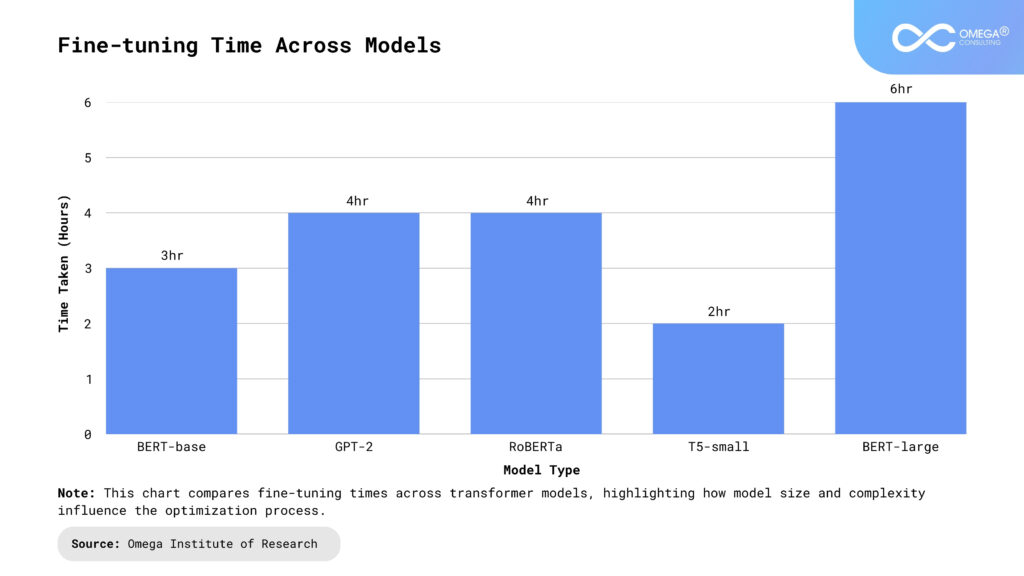

Choose a Pre-trained Model: The first step in fine-tuning is selecting a model that has been pre-trained on a large dataset. In computer vision, models like ResNet, VGG, and EfficientNet are often used, while in NLP, models like BERT, GPT, and T5 are popular choices. These models have learned rich feature representations from vast amounts of data. The right choice depends largely on how similar the pre-trained model’s original task and data are to the new task.

Adapt the Model to the New Task

Once a pre-trained model is selected, the next step is to modify the model’s architecture to suit the specific task. This could involve:

- Replacing the Output Layer: The output layer of a pre-trained model is specific to its original task (e.g., 1,000 classes for ImageNet classification). For fine-tuning, this layer is replaced with a new, randomly initialized one whose size matches the number of classes or outputs of the new task, so that the model produces predictions in the form the new task requires.

- Freezing Layers: In some cases, the initial layers of the model (which capture general features like edges and textures in images) may be frozen, meaning their weights are not updated during fine-tuning. Only the later layers, which are more task-specific, are trained. This helps retain the general features learned during the pre-training phase while adapting the model to the new task.
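The two adaptations above can be sketched without any deep-learning framework. The snippet below is a toy, assuming a model represented as a plain Python list of dense layers, each with a `trainable` flag; `Layer`, `replace_head`, and `freeze_early_layers` are hypothetical names, and the random "pre-trained" weights are stand-ins. In PyTorch, the same ideas usually correspond to assigning a new `nn.Linear` to the final layer (for example `model.fc` in torchvision’s ResNet) and setting `requires_grad = False` on the parameters to freeze.

```python
import random
from dataclasses import dataclass


@dataclass
class Layer:
    """A toy dense layer; `weights` is an out_dim x in_dim matrix."""
    name: str
    in_dim: int
    out_dim: int
    weights: list
    trainable: bool = True


def new_layer(name, in_dim, out_dim, rng):
    """Create a layer with small random weights (stand-in for real initialisation)."""
    weights = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(out_dim)]
    return Layer(name, in_dim, out_dim, weights)


def replace_head(model, num_classes, rng):
    """Swap the original output layer for a fresh one sized for the new task."""
    old_head = model[-1]
    model[-1] = new_layer("head", old_head.in_dim, num_classes, rng)
    return model


def freeze_early_layers(model, num_frozen):
    """Freeze the first `num_frozen` layers; later layers stay trainable."""
    for index, layer in enumerate(model):
        layer.trainable = index >= num_frozen
    return model


rng = random.Random(0)
# Hypothetical "pre-trained" network: three feature blocks plus a 1000-class head.
model = [new_layer(f"block{i}", 16, 16, rng) for i in range(3)]
model.append(new_layer("imagenet_head", 16, 1000, rng))

replace_head(model, num_classes=5, rng=rng)  # e.g. a 5-class target task
freeze_early_layers(model, num_frozen=2)

for layer in model:
    print(layer.name, layer.out_dim, "trainable" if layer.trainable else "frozen")
# block0 16 frozen
# block1 16 frozen
# block2 16 trainable
# head 5 trainable
```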

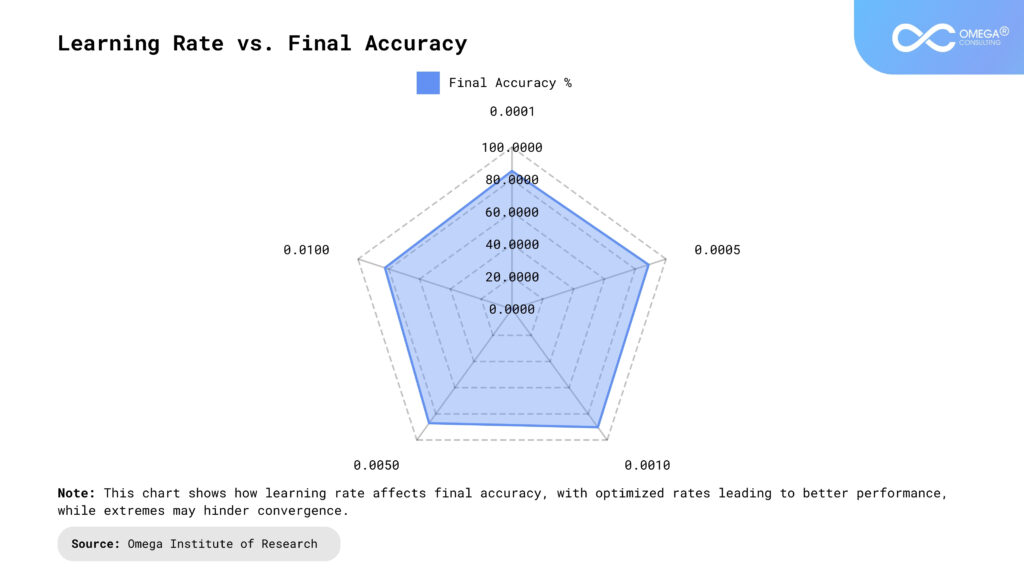

Fine-Tune the Model: Next, the modified model is trained on the new task’s dataset. Instead of starting from random weights, it begins with the pre-trained weights and builds on the knowledge they encode. Fine-tuning is typically done with a lower learning rate than pre-training, which keeps the model from “forgetting” the general features learned during the initial training phase. These gradual adjustments let the model refine its understanding of the new task without disrupting its existing knowledge.
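A minimal sketch of this step, assuming a toy linear model (y = w·x + b) standing in for a network: training resumes from hypothetical pre-trained values instead of random ones, uses a modest learning rate, and skips any parameter listed as frozen. The functions `fine_tune` and `mse` and all the numbers are illustrative assumptions, not taken from a real model.

```python
def mse(params, data):
    """Mean squared error of the linear model y = w * x + b on (x, y) pairs."""
    return sum((params["w"] * x + params["b"] - y) ** 2 for x, y in data) / len(data)


def fine_tune(params, data, lr=0.05, epochs=20, frozen=()):
    """Full-batch gradient descent that starts from `params` and skips frozen names."""
    params = dict(params)  # copy, so the pre-trained checkpoint stays untouched
    n = len(data)
    for _ in range(epochs):
        residuals = [params["w"] * x + params["b"] - y for x, y in data]
        grads = {
            "w": sum(2 * r * x for r, (x, _y) in zip(residuals, data)) / n,
            "b": sum(2 * r for r in residuals) / n,
        }
        for name, grad in grads.items():
            if name not in frozen:
                params[name] -= lr * grad
    return params


# Hypothetical pre-trained weights and a small dataset for the new task, whose
# true relationship (y = 2.2x + 0.4) is close to, but not the same as, the old one.
pretrained = {"w": 2.0, "b": 0.5}
task_data = [(x, 2.2 * x + 0.4) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]

tuned = fine_tune(pretrained, task_data)
w_only = fine_tune(pretrained, task_data, frozen=("b",))  # "b" stays at 0.5
print(f"loss before: {mse(pretrained, task_data):.4f}, after: {mse(tuned, task_data):.4f}")
```

Because training starts near a good solution, a few small steps are enough; a real network would use mini-batches and an optimizer such as SGD or Adam, but the shape of the loop is the same.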

Evaluate and Adjust: After fine-tuning, the model is evaluated on a validation set. If performance is not satisfactory, adjustments can be made, such as unfreezing more layers, adjusting the learning rate, or augmenting the dataset. This iterative process helps refine the model’s performance and ensures it generalizes well to unseen data.

Fine-Tuning Techniques

There are several techniques for fine-tuning, depending on the task and the model. Some of the most common methods include:

Feature Extraction: The pre-trained model is used as a fixed feature extractor. Its weights are frozen, and the output of one of its layers (often the one just before the original output layer) is fed into a new classifier, such as logistic regression or a support vector machine. Only this new classifier is trained. This method is useful when the new dataset is small and the pre-trained model already produces useful features.
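Feature extraction can be sketched as follows, assuming a hypothetical `frozen_extractor` (a fixed projection plus ReLU) standing in for a pre-trained backbone, and a hand-written logistic regression as the new classifier. In practice the features would come from a real network and the classifier might be scikit-learn’s `LogisticRegression`; the dataset here is made up, and accuracy is measured on the training points only for brevity.

```python
import math


def frozen_extractor(point):
    """Stand-in for a frozen pre-trained backbone: a fixed projection followed by ReLU.
    Its weights are hard-coded and never updated."""
    projection = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
    return [max(0.0, a * point[0] + b * point[1]) for a, b in projection]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, f):
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


def train_logistic_regression(features, labels, lr=1.0, epochs=5000):
    """Fit only the new classifier (weights and bias) with full-batch gradient descent."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for f, y in zip(features, labels):
            err = predict_proba(w, b, f) - y  # derivative of log loss w.r.t. the logit
            grad_w = [g + err * fi / n for g, fi in zip(grad_w, f)]
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Small hypothetical dataset: label 1 when the two coordinates sum to more than 1.
points = [(0.1, 0.2), (0.3, 0.4), (0.2, 0.1), (0.4, 0.3),
          (0.9, 0.8), (0.7, 0.9), (1.0, 0.6), (0.8, 0.7)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

# Features are computed once; the extractor itself is never trained.
features = [frozen_extractor(p) for p in points]
w, b = train_logistic_regression(features, labels)
predictions = [int(predict_proba(w, b, f) > 0.5) for f in features]
accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
print("training accuracy:", accuracy)
```

Since the backbone never changes, its features can be computed once and cached, which is a large part of why this approach is cheap.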

Unfreezing Layers: In this approach, the earlier layers of the model are frozen, while the later layers are unfrozen and fine-tuned. This method is useful when the new dataset is somewhat different from the original dataset, but the general features learned by the pre-trained model still provide a strong foundation. Fine-tuning only the later layers allows the model to adapt to the specifics of the new task while maintaining the general features learned in the earlier layers.

Gradual Unfreezing: Gradual unfreezing is a more advanced technique in which layers are unfrozen one at a time, starting from the last layer and moving toward the input. Unfreezing layers incrementally lets the model adjust to the new data without abruptly overwriting what the earlier, more general layers have learned, which helps reduce overfitting, improves generalization, and makes the transition to the new task smoother.
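The unfreezing schedule itself is simple to express. The sketch below assumes layers are identified by name and ordered from input to output, and that one additional layer is unfrozen per epoch; `trainable_at_epoch` and the layer names are hypothetical, and a real loop would apply the result by toggling each layer’s trainable flag before training the epoch.

```python
def trainable_at_epoch(layer_names, epoch, layers_per_step=1):
    """Names of the layers to train at `epoch` under gradual unfreezing.

    Starts with only the last layer and unfreezes `layers_per_step` more
    layers (moving towards the input) at each new epoch.
    """
    n_unfrozen = min(len(layer_names), (epoch + 1) * layers_per_step)
    return layer_names[len(layer_names) - n_unfrozen:]


# Hypothetical four-layer model, ordered from input to output.
layers = ["embedding", "block1", "block2", "head"]
for epoch in range(5):
    active = trainable_at_epoch(layers, epoch)
    # ...mark `active` layers trainable, freeze the rest, then train one epoch...
    print(epoch, active)
# 0 ['head']
# 1 ['block2', 'head']
# 2 ['block1', 'block2', 'head']
# 3 ['embedding', 'block1', 'block2', 'head']
# 4 ['embedding', 'block1', 'block2', 'head']
```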

Learning Rate Schedules: Using a learning rate schedule during fine-tuning can help prevent the model from overshooting the optimal weights. Techniques like learning rate warm-up (starting with a low learning rate and gradually increasing it) and learning rate annealing (reducing the learning rate as training progresses) are often employed. These strategies help the model converge more effectively and avoid the pitfalls of using a fixed learning rate throughout the entire fine-tuning process.
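A warm-up followed by annealing can be written as a function of the training step. This sketch assumes linear warm-up and cosine annealing to zero; `lr_at`, the base rate of 2e-5, and the 100 warm-up steps are illustrative assumptions, not recommended values. In PyTorch, a factor version such as `lambda step: lr_at(step, total, base_lr=1.0)` could be passed to `torch.optim.lr_scheduler.LambdaLR`, which multiplies the optimizer’s initial learning rate by the returned factor.

```python
import math


def lr_at(step, total_steps, base_lr=2e-5, warmup_steps=100):
    """Learning rate at `step`: linear warm-up to `base_lr`, then cosine annealing to 0."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # clamp if training runs past `total_steps`
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


total = 1000
for step in (0, 49, 99, 100, 550, 999):
    print(step, f"{lr_at(step, total):.2e}")
```

The warm-up keeps early updates small while the new output layer is still random, and the annealing phase shrinks the step size as the model approaches a good solution.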

Challenges in Fine-Tuning

While fine-tuning offers significant advantages, there are several challenges that practitioners must navigate:

Overfitting: Fine-tuning on a small dataset can lead to overfitting, where the model becomes too tailored to the training data and performs poorly on unseen data, because it learns noise or irrelevant patterns from the limited examples. Techniques like data augmentation, dropout, and early stopping help mitigate this by discouraging the model from memorizing the training data. A held-out validation set (or cross-validation, when data is especially scarce) can be used to monitor how well the model generalizes.
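Early stopping can be sketched as a small helper that watches the validation loss. This is a minimal version, assuming one validation measurement per epoch and a hypothetical `EarlyStopping` class with a `patience` setting; the loss values are made up to show a typical plateau. In practice, one would also save the weights from the best epoch and restore them after stopping.

```python
class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` evaluations."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one validation result; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses: improvement, then a plateau as the model overfits.
val_losses = [0.90, 0.72, 0.65, 0.66, 0.67, 0.70, 0.60]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    # ...one epoch of fine-tuning would run here before computing `loss`...
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break

print(f"stopped at epoch {stopped_at}; best epoch {stopper.best_epoch} (loss {stopper.best})")
# stopped at epoch 5; best epoch 2 (loss 0.65)
```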

Catastrophic Forgetting: If too many layers are unfrozen and the model is trained too aggressively, it can lose the useful features learned from the original task, a phenomenon known as catastrophic forgetting. This is particularly problematic when transferring knowledge from one domain to another. Using a low learning rate and unfreezing layers gradually helps the model adapt to the new task without losing its general capabilities. Techniques such as regularization and incremental learning can further help balance new and old knowledge.
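One concrete form of the regularization mentioned above is an L2 penalty that pulls weights toward their pre-trained values instead of toward zero, sometimes called L2-SP. The sketch below assumes a toy quadratic task loss, 0.5·||w − target||², in place of a real training objective; the weights, `target`, and `alpha` values are hypothetical. With the penalty, the fine-tuned weights settle between the pre-trained values and the point the new task alone would pull them to.

```python
def l2_sp_penalty(weights, pretrained, alpha):
    """(alpha / 2) * ||w - w_pretrained||^2: grows as weights drift from the checkpoint."""
    return 0.5 * alpha * sum((w - w0) ** 2 for w, w0 in zip(weights, pretrained))


def l2_sp_grad(weights, pretrained, alpha):
    """Gradient of the penalty above: alpha * (w - w_pretrained) for each weight."""
    return [alpha * (w - w0) for w, w0 in zip(weights, pretrained)]


def fine_tune_with_penalty(pretrained, target, alpha, lr=0.1, steps=200):
    """Gradient descent on the toy task loss 0.5 * ||w - target||^2 plus the penalty."""
    weights = list(pretrained)
    for _ in range(steps):
        task_grad = [w - t for w, t in zip(weights, target)]
        reg_grad = l2_sp_grad(weights, pretrained, alpha)
        weights = [w - lr * (g + r) for w, g, r in zip(weights, task_grad, reg_grad)]
    return weights


pretrained = [1.0, -0.5]  # hypothetical pre-trained weights
target = [3.0, 1.5]       # where the new task alone would pull them
plain = fine_tune_with_penalty(pretrained, target, alpha=0.0)
anchored = fine_tune_with_penalty(pretrained, target, alpha=1.0)
print("no penalty:  ", [round(w, 3) for w in plain])
print("with penalty:", [round(w, 3) for w in anchored])
# without the penalty the weights end near [3.0, 1.5]; with alpha=1.0, near [2.0, 0.5]
```

Larger `alpha` values keep the model closer to its pre-trained knowledge at the cost of fitting the new task less closely.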

Domain Shift: When the new dataset differs significantly from the original one, the model may struggle to adapt. This is known as domain shift, and it can lead to poor performance on data that is not representative of what the model was trained on. In such cases, domain adaptation techniques or domain-specific pre-trained models can help the model handle the differences between the original and new data. Fine-tuning on a mix of data from both domains can also improve its ability to generalize across them.
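Mixing data from both domains can be as simple as building each training batch from both datasets. This sketch assumes a hypothetical `mixed_batches` generator that draws a fixed fraction of every batch from the original (source) domain and the rest from the new (target) domain; the 25% source fraction and the tagged toy datasets are arbitrary illustrative choices.

```python
import random


def mixed_batches(source, target, batch_size=8, source_fraction=0.25,
                  num_batches=10, seed=0):
    """Yield batches that mix target-domain examples with some source-domain ones.

    `source_fraction` of every batch comes from the source domain and the rest
    from the target domain; examples are sampled without replacement per batch.
    """
    rng = random.Random(seed)
    n_source = round(batch_size * source_fraction)
    n_target = batch_size - n_source
    for _ in range(num_batches):
        batch = rng.sample(source, n_source) + rng.sample(target, n_target)
        rng.shuffle(batch)  # avoid a fixed source/target order inside the batch
        yield batch


# Hypothetical datasets tagged with their domain.
source_data = [("source", i) for i in range(100)]
target_data = [("target", i) for i in range(40)]

for batch in mixed_batches(source_data, target_data, num_batches=2):
    print(sum(1 for domain, _ in batch if domain == "source"), "of", len(batch), "from source")
# 2 of 8 from source
# 2 of 8 from source
```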

Applications of Fine-Tuning

Fine-tuning has been successfully applied in various domains, including:

Computer Vision: Fine-tuning pre-trained models on specific datasets, such as medical or satellite images, has led to significant performance improvements. For example, a model trained on ImageNet can be fine-tuned for object detection or facial recognition. Fine-tuning lets the model adjust to the specific characteristics of the new images, so it can recognize the subtle differences that matter in specialized tasks like analyzing medical scans or satellite imagery.

Natural Language Processing (NLP): Fine-tuning large language models like BERT, GPT, and T5 has transformed tasks such as text classification, named entity recognition (NER), and sentiment analysis. Fine-tuning on domain-specific data, such as legal or medical text, teaches these models the domain’s context and terminology, leading to more precise results in specialized tasks like medical text analysis or legal document review.

Speech Recognition: Pre-trained speech models like DeepSpeech can be fine-tuned for specific accents, languages, or industries. Fine-tuning lets these models recognize speech more accurately, including in noisy environments when the fine-tuning data reflects those conditions. This improves transcription quality and robustness in specialized fields like customer service or healthcare, where domain-specific vocabulary and accents are common.

Future of Fine-Tuning

As machine learning models continue to grow in size and complexity, fine-tuning will remain a crucial technique for adapting pre-trained models to specific tasks. Future advancements may include:

Few-Shot Learning: Advances in few-shot and zero-shot learning are changing how models can be adapted with minimal data. These techniques make it possible to fine-tune models with only a handful of labeled examples, expanding fine-tuning to new domains. With only a few examples, models can learn to perform well on tasks they have not encountered before. This is particularly useful where labeled data is scarce, such as rare disease diagnosis or translation for niche languages, making fine-tuning a more versatile tool across industries.

Meta-Learning: Meta-learning trains models across many tasks so that they learn how to learn, allowing them to adapt to a new task from minimal data. This can make fine-tuning more efficient across a wide range of tasks, significantly reducing the time and data needed to adapt to new environments. It can also make models more robust and versatile for tasks that require a high degree of adaptability, such as personalized recommendations or autonomous decision-making.

Automated Fine-Tuning: Tools and frameworks that automate the fine-tuning process are making it more accessible to non-experts. Such systems can tune hyperparameters, help select suitable data, and optimize the model’s performance without requiring in-depth expertise, so practitioners can deploy models to new tasks with minimal effort. This democratization opens up opportunities for businesses and researchers to apply machine learning to a broader range of applications, from automated customer support to real-time data analysis.

Conclusion

Fine-tuning is a powerful technique that lets practitioners adapt pre-trained models to specific tasks, saving time and computational resources while improving performance. By carefully selecting a pre-trained model, adjusting its architecture, and training it further on a smaller dataset, it is possible to achieve state-of-the-art results in many domains. The model retains the general knowledge gained from large datasets while refining its ability to handle specialized tasks. Because it reduces the need for large amounts of labeled data and makes efficient use of compute, fine-tuning will continue to play an essential role in making powerful models more accessible, scalable, and adaptable for businesses and researchers alike, and in enabling rapid prototyping and deployment as needs change.

