- Technology

- By Omega Team

In the ever-evolving landscape of digital threats, the role of artificial intelligence (AI) is becoming increasingly pivotal. One of the most groundbreaking advancements in AI is the emergence of generative AI, which is redefining the boundaries of innovation across various sectors. Generative AI, with its ability to autonomously create new content and solutions, is now being harnessed to revolutionize cybersecurity. This article explores the unique capabilities and transformative potential of generative AI as a guardian of cybersecurity, delving into its applications, challenges, and future directions.

Understanding Generative AI

Generative AI is a subset of artificial intelligence focused on generating new data, whether images, text, or software code. Unlike traditional AI, which typically analyzes existing data to find patterns or make predictions, generative AI learns the underlying patterns of its training data and then produces new, synthetic data that mirrors them.

Generative Adversarial Networks (GANs):

GANs consist of two neural networks—the generator and the discriminator—that operate in opposition to each other. The generator creates new data instances, while the discriminator evaluates them against real data. This adversarial process improves the generator’s ability to produce data that is indistinguishable from real data, making GANs powerful tools for generating realistic content, including deepfakes and synthetic data for training purposes.
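The adversarial process described above is commonly written as a two-player minimax game. The formulation below is the standard GAN objective, included as a reference sketch; the symbols G (generator), D (discriminator), p_data (distribution of real data), and p_z (noise distribution) are notation introduced here for illustration.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

Here D(x) is the discriminator's estimate of the probability that x is real, and G(z) maps a noise vector z to a synthetic sample. The discriminator tries to maximize V while the generator tries to minimize it.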

Variational Autoencoders (VAEs):

VAEs encode input data into a probability distribution over a latent space and then decode points from that space to reconstruct the original input. Once trained, a VAE can generate new data by sampling from the latent space and decoding the samples, making it effective for applications that require new variations of the input data, such as image and video synthesis.
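The encode, sample, and decode process is usually trained by maximizing the evidence lower bound (ELBO). The expression below is the standard form, given as a reference sketch; the symbols (encoder q with parameters φ, decoder p with parameters θ, prior p(z)) are notation introduced here for illustration.

```latex
\mathcal{L}(\theta, \phi; x) =
  \mathbb{E}_{q_{\phi}(z \mid x)}\big[\log p_{\theta}(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_{\phi}(z \mid x) \,\|\, p(z)\big)
```

The first term rewards accurate reconstruction of the input, and the second keeps the encoded distribution close to the prior so that sampling from the latent space yields plausible data.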

The Role of Generative AI in Cybersecurity

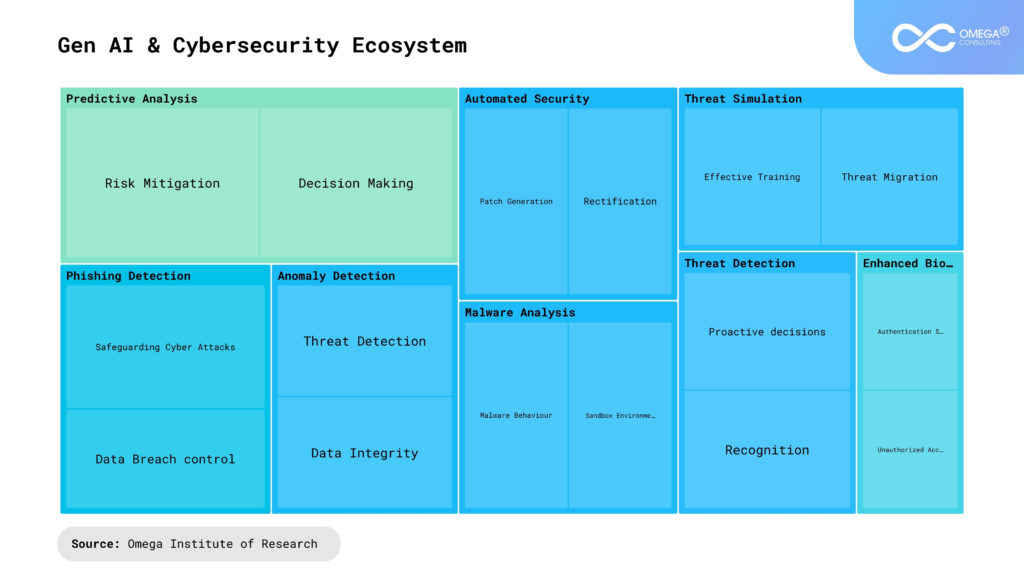

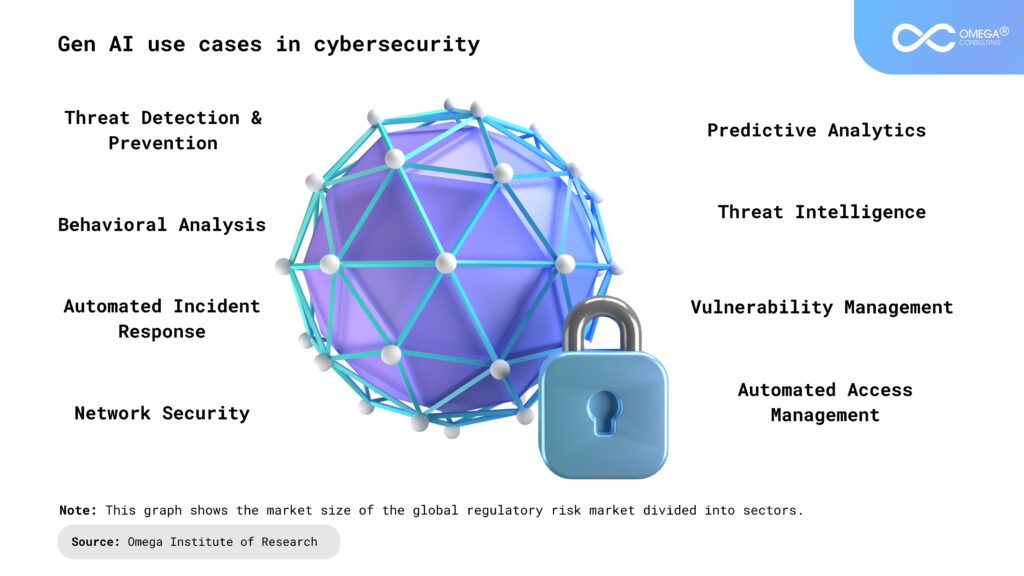

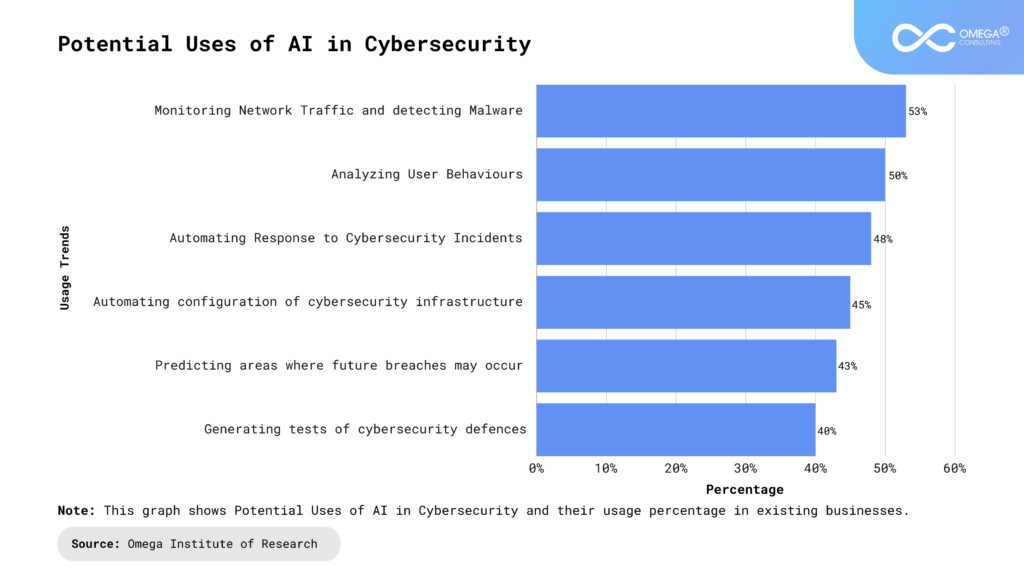

The integration of generative AI into cybersecurity leverages its unique ability to innovate and create, fundamentally changing how security systems operate. Here are some key areas where generative AI is poised to have a profound impact:

Advanced Threat Detection and Prevention

Generative AI enhances threat detection and prevention by moving beyond traditional, signature-based methods, which are often ineffective against novel threats.

- Behavioral Analysis: Generative AI models can continuously learn and adapt to the behavior of network traffic, user activity, and application usage, identifying anomalies that may indicate a cyber threat. This capability enables the detection of previously unknown threats by recognizing deviations from normal behavior patterns.

- Phishing Detection and Mitigation: Phishing attacks are a persistent threat to cybersecurity. Generative AI can analyze the structure, language patterns, and metadata of emails to detect phishing attempts. Moreover, AI-generated phishing simulations can be used to train employees, improving organizational resilience against such attacks.
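To make the idea of flagging deviations from normal behavior concrete, the sketch below fits a single Gaussian to observations of normal activity and scores new observations by how unlikely they are under that model. This is a deliberately minimal stand-in for the much richer generative models used in practice; the traffic figures, the three-sigma threshold, and all function names are hypothetical.

```python
import math
from statistics import NormalDist


def fit_baseline(samples):
    """Fit a Gaussian to observations of normal behavior (a very simple generative model)."""
    return NormalDist.from_samples(samples)


def anomaly_score(model, value):
    """Negative log-likelihood of a value under the baseline; higher means more unusual."""
    density = model.pdf(value)
    # Guard against log(0) for values far out in the tails.
    return -math.log(max(density, 1e-300))


# Hypothetical training data: requests per minute seen during normal operation.
normal_traffic = [98, 102, 101, 97, 100, 103, 99, 100, 96, 104]
baseline = fit_baseline(normal_traffic)

# Hypothetical threshold: the score of a point three standard deviations from the mean.
threshold = anomaly_score(baseline, baseline.mean + 3 * baseline.stdev)


def is_anomalous(value):
    """Flag values that are less likely than the three-sigma point."""
    return anomaly_score(baseline, value) > threshold
```

With this baseline, a reading of 100 requests per minute is treated as normal, while a burst of 500 is flagged. A production system would model many features jointly and retrain as behavior shifts.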

Automated Incident Response

Speed and accuracy in incident response are crucial to minimizing damage from cyber attacks. Generative AI can automate and streamline various aspects of incident response.

- Real-time Threat Analysis: Generative AI can provide real-time analysis of incoming threat data, offering immediate insights into the nature and scope of an attack. This rapid assessment aids in quicker decision-making and more effective responses.

- Automated Remediation: Integrating generative AI with existing security tools can automate the remediation process, such as isolating affected systems, deploying patches, and removing malicious code. This reduces the response time and the burden on human operators.
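One way to picture automated remediation is as a playbook that maps a detected threat to an ordered list of actions, with a confidence gate deciding whether a human must approve them first. The sketch below is illustrative only: the `Alert` fields, the playbook entries, and the 0.9 threshold are all assumptions, and the step names are plain strings rather than calls into any real security tool.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    host: str
    kind: str          # hypothetical categories, e.g. "malware" or "unpatched"
    confidence: float  # model confidence in [0, 1]


# Hypothetical playbook: alert kind -> ordered remediation steps.
PLAYBOOK = {
    "malware": ["isolate_host", "remove_malicious_code"],
    "unpatched": ["deploy_patch"],
}


def plan_remediation(alert, auto_threshold=0.9):
    """Return the steps for an alert and whether they may run without approval."""
    steps = PLAYBOOK.get(alert.kind, [])
    if not steps:
        # Unknown threat types always go to a human analyst.
        return {"mode": "escalate", "steps": []}
    mode = "automatic" if alert.confidence >= auto_threshold else "needs_approval"
    return {"mode": mode, "steps": [f"{step}:{alert.host}" for step in steps]}
```

Keeping low-confidence and unrecognized alerts behind human approval limits the damage a false positive can cause while still reducing the load on operators.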

Proactive Vulnerability Management

Generative AI can transform vulnerability management by identifying and mitigating potential security weaknesses before they can be exploited.

- Code Vulnerability Analysis: Generative AI can scrutinize source code for potential security vulnerabilities, learning to identify coding patterns that are prone to exploitation. This predictive capability helps developers address issues before they become critical.

- Automated Patching: Once vulnerabilities are identified, generative AI can assist in generating and testing patches. By automating this process, organizations can apply patches more swiftly and reliably, maintaining a stronger security posture.

Enhanced Cyber Threat Intelligence

Generative AI improves cyber threat intelligence by analyzing vast datasets from diverse sources and generating actionable insights that inform security strategies.

- Threat Hunting: Generative AI supports proactive threat hunting by generating hypotheses about potential threats and validating them against existing data. This proactive approach allows organizations to identify and neutralize threats before they escalate.

- Dark Web Surveillance: By monitoring the dark web, generative AI can identify emerging threats and trends, providing early warnings about new attack vectors and malicious activities. This intelligence is crucial for staying ahead of cybercriminals.

Challenges and Ethical Considerations

Generative AI’s potential to revolutionize cybersecurity is immense, but it also comes with significant challenges. Addressing these challenges is crucial to fully harnessing the capabilities of generative AI while ensuring its responsible and ethical use. Here are some key challenges and considerations:

Adversarial Attacks

- Sophisticated Threats: Just as generative AI can enhance cybersecurity, it can also be used by adversaries to create more sophisticated and harder-to-detect attacks, such as deepfakes and polymorphic malware.

- Model Manipulation: Adversarial attacks involve manipulating AI models to produce incorrect or misleading outputs. Attackers can craft inputs specifically designed to deceive AI systems, leading to false positives or negatives in threat detection.
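The sketch below illustrates how a crafted input can produce a false negative. It uses a toy logistic-regression detector and an FGSM-style perturbation: each feature is nudged by a small, bounded amount in the direction that lowers the malicious score. The weights, sample, and perturbation budget are hypothetical, and real detectors and attacks are considerably more complex.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def predict(weights, bias, x):
    """Probability that x is malicious under a logistic-regression detector."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def evade(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to x is the
    weight vector, so stepping against sign(weights) is the worst case
    within an L-infinity budget of epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical detector parameters and a sample it correctly flags.
weights = [2.0, -1.0, 0.5]
bias = -1.0
sample = [1.0, 0.2, 0.8]

original = predict(weights, bias, sample)
adversarial = predict(weights, bias, evade(weights, sample, epsilon=0.5))
```

In this example the original sample scores above 0.5 (flagged as malicious), while the perturbed version scores below 0.5 and slips through, even though no feature moved by more than 0.5.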

Defense Mechanisms

- Adversarial Training: Exposing models to adversarial examples during training can enhance their robustness. However, this approach requires continuous updates and significant computational resources.

- Robust Model Architectures: Developing and implementing robust model architectures that are less susceptible to adversarial manipulation is a critical area of ongoing research.

Ethical and Legal Implications

- Training Data Requirements: Generative AI models require large datasets for training, which often include sensitive and personal information. Ensuring the privacy and security of this data is paramount.

- Compliance: Adhering to data protection regulations, such as GDPR and CCPA, is essential. This involves implementing strict data anonymization and encryption protocols to safeguard privacy.
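As one possible building block for the data-protection measures above, the sketch below replaces direct identifiers in a log record with keyed hashes before the record is used for training. The field names, the sample event, and the key are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization: under GDPR, pseudonymized data is still personal data, so this complements other controls rather than replacing them.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record, sensitive_fields, secret_key):
    """Return a copy of the record with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value), secret_key) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Hypothetical key; in practice it would come from a secrets manager.
key = b"replace-with-a-key-from-a-secrets-manager"

# Hypothetical log event.
event = {"user": "alice@example.com", "src_ip": "203.0.113.7", "bytes_sent": 5120}
clean = scrub_record(event, {"user", "src_ip"}, key)
```

Because the same input and key always give the same digest, a model can still learn per-user behavior patterns without seeing the raw identifier.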

Misuse Prevention

- Ethical Use: The ability of generative AI to create realistic synthetic data, including deepfakes, poses significant ethical risks. Ensuring that these technologies are used responsibly and not for malicious purposes is a major challenge.

- Regulatory Frameworks: Establishing comprehensive regulatory frameworks to govern the use of generative AI is necessary to prevent misuse. This includes guidelines for ethical AI development and deployment, as well as mechanisms for accountability and transparency.

Resource Intensity

Computational Costs

- High Resource Requirements: Training and deploying generative AI models are resource-intensive processes, requiring substantial computational power, memory, and storage.

- Environmental Impact: The environmental impact of high energy consumption in AI training processes is a growing concern. Developing more energy-efficient models and leveraging green computing technologies is essential.

Scalability

- Infrastructure Needs: Scaling generative AI solutions to meet the needs of large organizations without compromising performance is a significant challenge. This requires investment in scalable infrastructure and optimization techniques.

- Expertise: The deployment and maintenance of generative AI systems require specialized expertise. Ensuring that organizations have access to skilled professionals is crucial for successful implementation.

Security of AI Models

Model Integrity

- Tampering Risks: AI models themselves can be targets of cyber attacks. Ensuring the integrity and security of AI models is critical to prevent tampering and unauthorized access.

- Secure Deployment: Implementing secure deployment practices, including encryption, access controls, and regular audits, can help protect AI models from potential threats.
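A simple tamper check that fits into secure deployment is to verify a model file against a known-good SHA-256 digest before loading it. The sketch below assumes the expected digest was recorded at release time and stored somewhere the attacker cannot modify; the function names are illustrative.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=65536):
    """Compute the SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path, expected_digest):
    """Return True only if the file matches the digest recorded at release time."""
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(sha256_of_file(path), expected_digest)
```

A loader would call `verify_model` first and refuse to deserialize the file if it returns `False`. A digital signature over the digest would additionally protect the expected value itself.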

Continuous Monitoring

- Ongoing Vigilance: Continuous monitoring of AI models for signs of compromise or performance degradation is essential. This involves real-time threat detection and regular updates to maintain robustness against new threats.
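Monitoring for performance degradation can be sketched as a rolling check of how often the model's verdicts are later confirmed as correct. The class below is a minimal illustration; the class name, baseline rate, tolerance, and window size are all hypothetical and would need tuning for a real deployment.

```python
from collections import deque


class DegradationMonitor:
    """Track recent outcomes and flag when accuracy drops below a baseline."""

    def __init__(self, baseline_rate, tolerance=0.1, window=50):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically

    def record(self, correct):
        """Record whether the latest verdict was confirmed as correct."""
        self.outcomes.append(1 if correct else 0)

    def degraded(self):
        """Return True once a full window shows accuracy below baseline minus tolerance."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        rate = sum(self.outcomes) / len(self.outcomes)
        return rate < self.baseline_rate - self.tolerance
```

A sustained drop can indicate either drift in the threat landscape or tampering with the model, and either case warrants retraining or investigation.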

Future Directions and Innovations

Several anticipated advances could extend what generative AI offers cybersecurity. Here is a closer look at some key areas:

Quantum Computing Integration

- Enhanced Processing Power: Integrating generative AI with quantum computing could eventually provide additional processing power, enabling the development of more complex and efficient models.

- Speed and Efficiency: For certain classes of problems, quantum algorithms could accelerate training and inference, leading to faster response times in cybersecurity tasks. Practical speedups for machine learning workloads have yet to be demonstrated at scale.

- Complexity Handling: If those speedups materialize, quantum computing could help handle the complexity of cybersecurity data and models, allowing for deeper analysis and more accurate predictions.

Enhanced Human-AI Collaboration

- Interactive Decision Support: Future developments will likely focus on creating AI systems that provide interactive decision support to human analysts. These systems will augment human expertise by offering insights, suggestions, and explanations for cybersecurity incidents.

- Explainability and Transparency: Emphasis will be placed on enhancing the explainability and transparency of AI-generated insights, ensuring that human operators can understand and trust the recommendations provided by AI systems.

- User-Friendly Interfaces: User-friendly interfaces that facilitate seamless interaction between humans and AI systems will be developed, enabling effective collaboration in cybersecurity operations.

Continuous Learning and Adaptation

- Self-Improving Models: Research into self-improving AI systems will continue, with a focus on developing models that can autonomously update their knowledge base and adapt to new cyber threats.

- Dynamic Threat Response: AI systems capable of dynamically adjusting their defense strategies in response to evolving threats will be developed. These systems will continuously monitor and analyze cyber threat landscapes, proactively identifying and mitigating emerging risks.

- Robustness Against Adversarial Attacks: Future generative AI models will be designed to be more robust against adversarial attacks, incorporating techniques such as adversarial training and robust optimization to enhance their resilience.

Ethical and Responsible AI Development

- Ethical Guidelines and Regulations: There will be a growing emphasis on establishing ethical guidelines and regulatory frameworks for the development and deployment of generative AI in cybersecurity. These frameworks will address issues such as data privacy, fairness, transparency, and accountability.

- Responsible Use: Organizations and researchers will prioritize the responsible and ethical use of generative AI, taking proactive measures to prevent misuse and mitigate potential harms. This may include implementing safeguards, conducting risk assessments, and fostering transparency in AI development processes.

- Community Engagement: Collaboration between stakeholders, including government agencies, industry experts, academia, and civil society, will be essential in shaping ethical standards and ensuring the responsible adoption of generative AI technologies in cybersecurity.

Conclusion

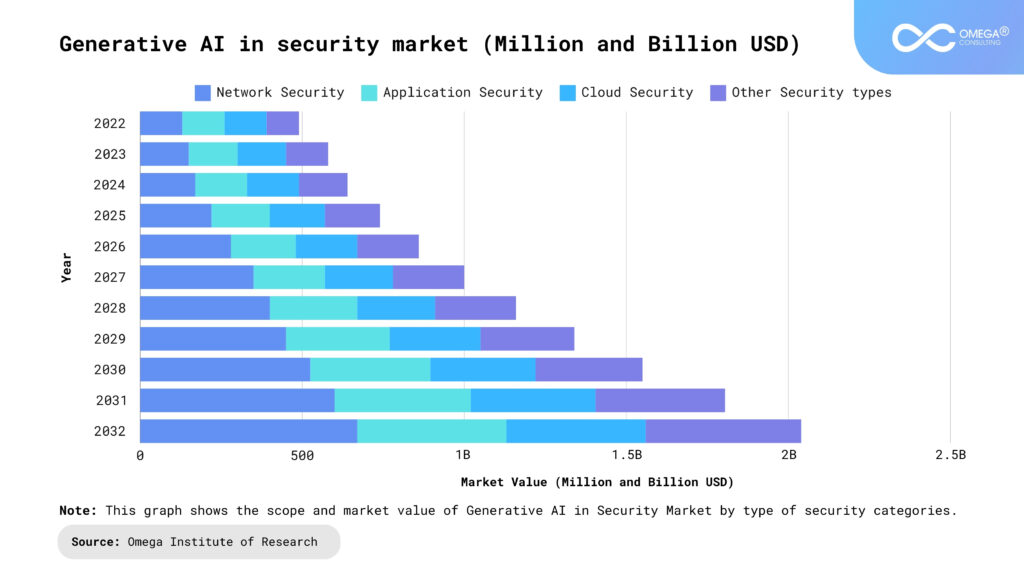

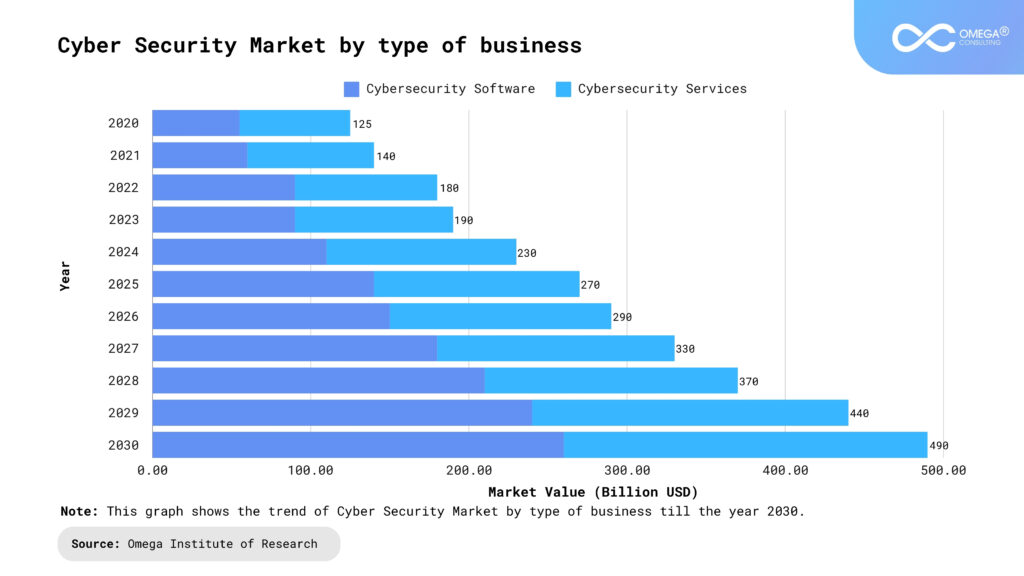

Generative AI is poised to become a cornerstone of modern cybersecurity, offering innovative solutions to detect, prevent, and respond to cyber threats. Its ability to analyze vast amounts of data, generate actionable insights, and automate complex tasks makes it a powerful tool for enhancing security.

However, integrating generative AI into cybersecurity also presents challenges that must be carefully managed. Adversarial attacks, ethical considerations, and resource intensity must all be addressed to fully realize the technology’s potential.

As research and development continue, the future of generative AI in cybersecurity looks promising. By harnessing its capabilities and addressing its challenges, we can create a more secure digital landscape, safeguarding organizations and individuals from the ever-evolving threat of cyber attacks. Generative AI is not just a tool but a guardian of the cyber realm, ready to defend and innovate in the face of emerging threats.

The next frontier in cybersecurity is here, and it is powered by the creative and adaptive strength of generative AI. This technology promises to redefine digital defense, making the cyber world safer and more resilient against the relentless tide of cyber threats. As we continue to explore and develop this powerful tool, the role of generative AI as a cybersecurity guardian will only become more critical, paving the way for a new era of digital security.

